An in-depth guide to Mistral Large 3, the open-source MoE LLM. Learn about its architecture, 675B parameters, 256k context window, and benchmark performance.

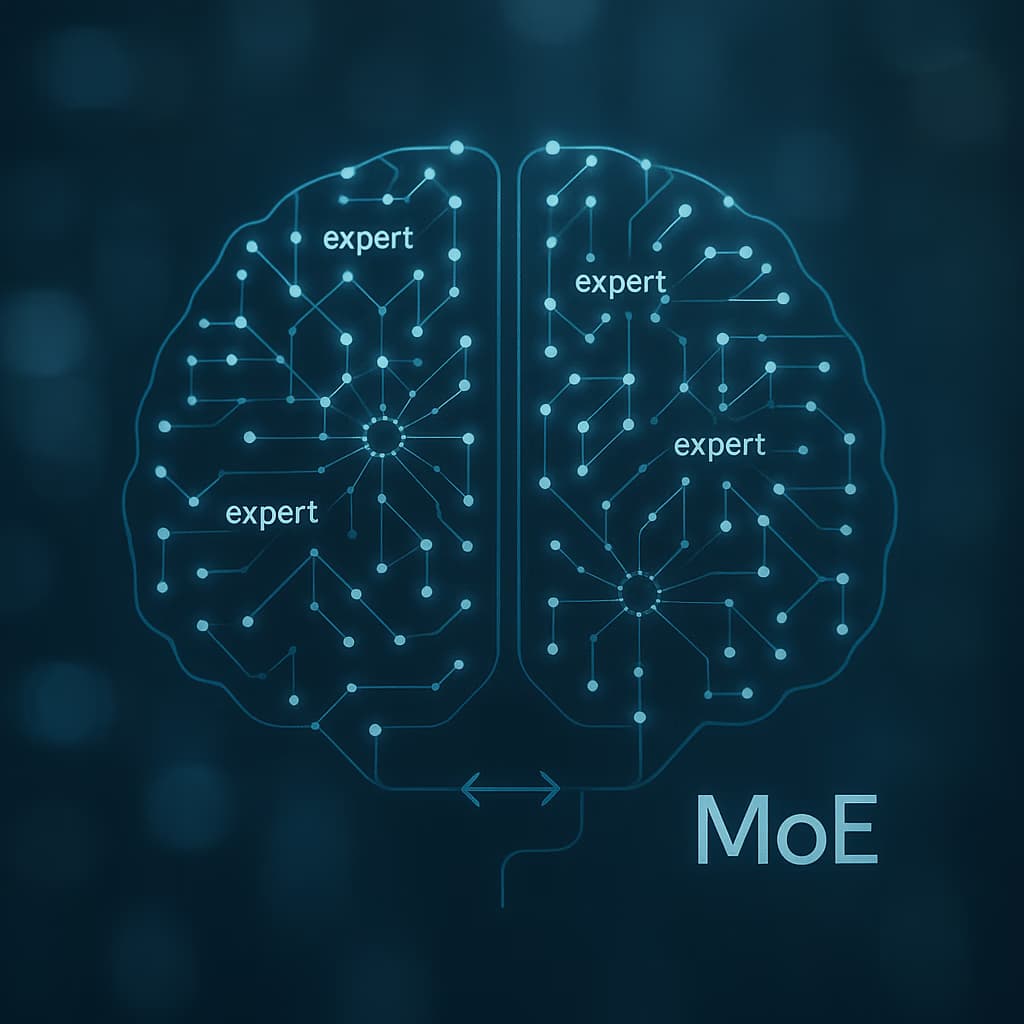

An in-depth technical analysis of Kimi K2, the trillion-parameter LLM from Moonshot AI. Learn about its Mixture-of-Experts (MoE) architecture and agentic AI focus.

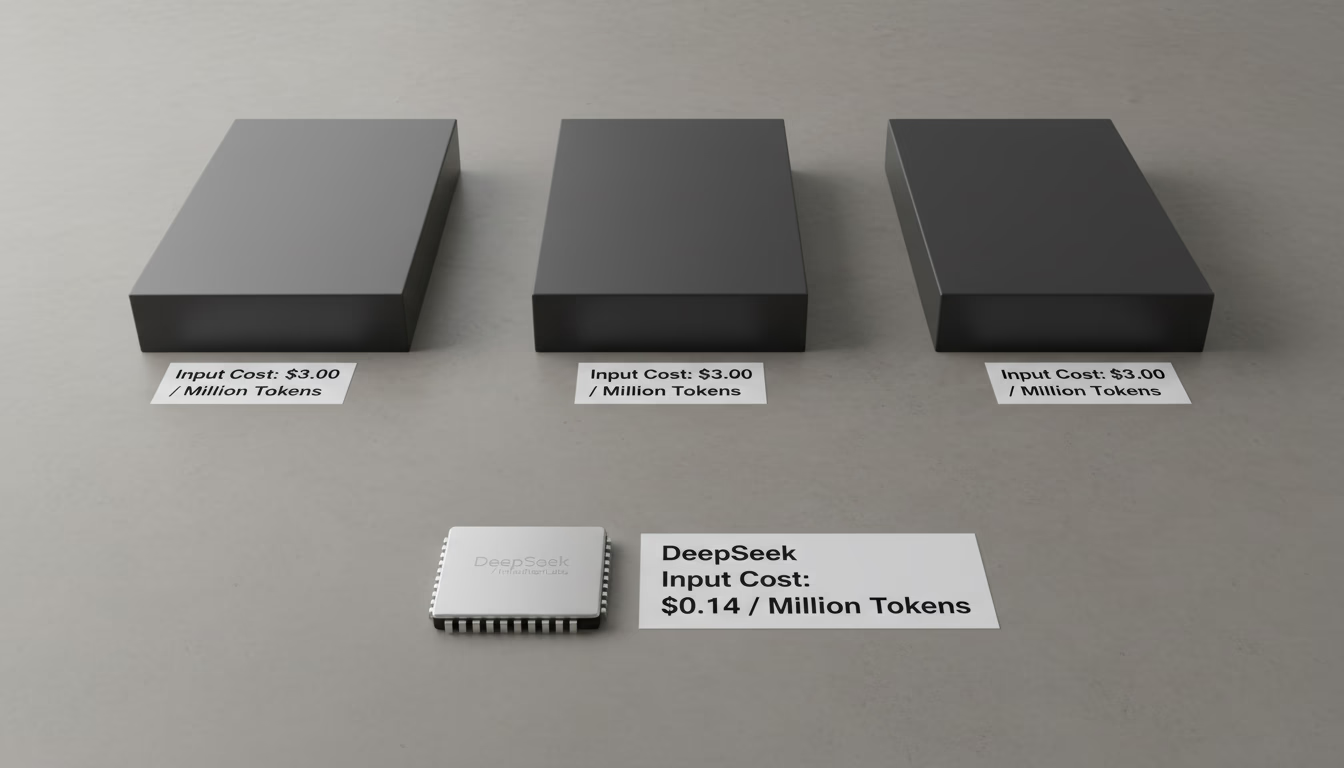

Learn why DeepSeek's AI inference is up to 50x cheaper than competitors. This analysis covers its Mixture-of-Experts (MoE) architecture and pricing strategy.

Explore DeepSeek-OCR, an AI system that uses optical compression to process long documents. Learn how its vision-based approach addresses long-context limits in LLMs.

An analysis of GLM-4.6, the leading open-source coding model. Compare its benchmarks against Anthropic's Sonnet and OpenAI's GPT-5, and learn about its hardware requirements.

An overview of IBM's Granite 4.0 LLM family, including its hybrid Mamba-2/Transformer architecture, Nano edge models, Granite Vision, Granite Guardian safety tools, and applications for healthcare AI and data privacy.

An analysis of China's open-source LLM landscape in 2025. Covers key models like Qwen, Ernie, and GLM from major tech firms and leading AI startups.

Comprehensive guide to Mixture of Experts (MoE) models, covering architecture, training, and real-world implementations including DeepSeek-V3, Llama 4, Mixtral, and other frontier MoE systems as of 2026.

© 2026 IntuitionLabs. All rights reserved.