Mistral Large 3: An Open-Source MoE LLM Explained

Executive Summary

Mistral Large 3 is a state-of-the-art, open-weight large language model (LLM) released by French AI startup Mistral AI in December 2025 ([1]) ([2]). It is a sparse mixture-of-experts (MoE) model featuring 41 billion active parameters and a total of 675 billion parameters ([1]) ([2]), with an unprecedented 256,000 token context window. Mistral Large 3 is fully open-sourced under the Apache 2.0 license, marking a major milestone in open AI research ([1]) ([3]). According to official sources, the model was trained from scratch on an exascale NVIDIA GPU cluster (about 3,000 H200 GPUs) and demonstrates frontier-level performance on a wide range of tasks, including general knowledge, multilingual conversation, coding, and multimodal understanding ([4]) ([3]).

Benchmark results reported by independent analyses and Mistral itself show that Large 3 is near the top of open-source models on traditional ML benchmarks. For example, it achieves roughly 85.5% accuracy on MMLU (8-language) ([5]), second-ranked non-reasoning model on the LMArena open-source leaderboard ([6]) ([7]), and excels at code generation (≈92% pass@1 on HumanEval ([8])). At the same time, it lags behind specialized “expert” models on the hardest reasoning benchmarks – e.g. ~43.9% on GPQA Diamond ([9]) – reflecting a design trade-off favoring broad general knowledge and throughput (“System 1” tasks) over extreme multi-step reasoning. Critically, Mistral Large 3 is optimized for cost-efficiency and scalability: through GPU-specific optimizations (NVFP4 quantization, Blackwell Attention kernels etc.) it can run on a single 8× GPU node, with API cost roughly $0.5 per million input tokens ([10]) ([11]).

Mistral Large 3’s open-source release and performance put it in direct competition with proprietary systems. Compared to the very latest closed models (e.g. Google’s Gemini 3 Pro, OpenAI’s GPT-5.x, Anthropic’s Claude 4.5), Mistral L3 offers fewer modalities (text+images only, vs. video/audio in Gemini) and somewhat lower raw scores on the absolute hardest benchmarks ([9]) ([12]), but it unparalleled in openness and deployment flexibility. Table 1 (below) summarizes key comparisons between Mistral Large 3 and leading LLMs. Notably, Mistral Large 3 is licensed Apache 2.0, supports a 256K context window, and ranks #2 among open-source models (only behind Gemini 3 Pro) in LMArena ([13]).

From an enterprise perspective, Mistral has rapidly gained traction. High-profile partnerships include a 2025 multi-year cloud agreement to deploy Mistral models at HSBC ([14]), and evidence of growing adoption in European industry (e.g. BNP Paribas collaboration ([15])). The model’s open nature and on-premise deployment support are seen as key advantages for data-sensitive sectors. Meanwhile, Mistral AI has taken steps to address sustainability (publishing a lifecycle analysis for earlier models ([16])) and is building “Mistral Compute” European infrastructure in partnership with NVIDIA (won praise from President Macron) ([17]).

This report provides a comprehensive, evidence-based overview of Mistral Large 3. Section II reviews Mistral AI’s background and the development of its model family. Section III details the architecture, training, and multimodal design of Large 3. Section IV examines its benchmark performance and efficiency. Section V covers deployment, tooling, and case studies of enterprise use. Section VI discusses implications for the AI ecosystem, including open-source strategy, ethics, and EU tech strategy. Finally, Section VII highlights future directions for Mistral AI and the broader LLM landscape.

Introduction and Background

Large language models (LLMs) have revolutionized AI in recent years, but the field is marked by intense competition between closed-source “frontier” systems (e.g. OpenAI’s GPT series, Anthropic’s Claude, Google’s Gemini) and an emerging open-source ecosystem. Mistral AI, founded in mid-2023 in Paris by former researchers from Google DeepMind and Meta ([18]), quickly positioned itself as a leading European contender. In its first year of existence, Mistral raised hundreds of millions in funding and achieved a valuation in the multi-billion dollar range ([19]) ([15]). Key backers include a16z, Lightspeed, Nvidia, and Salesforce, and the company struck a $16 million deal with Microsoft for GPU resources ([15]). These investments fuelled rapid model development: within months of its founding, Mistral released “Mistral Large,” a ~7B-parameter state-of-the-art LLM (2023) that immediately ranked near the top of benchmarks ([20]).

Building on that momentum, Mistral followed the industry trend towards bigger and more efficient models. Late 2023 saw Mixtral 8×7B, a sparse Mixture-of-Experts (SMoE) model: a set of eight distinct 7B models that collectively outperformed Llama 2 (70B) on many tasks ([21]). In mid-2024, Mistral unveiled Mistral Large 2, a dense 123B-parameter LLM (with 128K context) aimed at code, math, reasoning, and multilingual benchmarks ([22]) ([23]).By 2025, Mistral’s lineup included the “Small” series (e.g. 24B Small 3.2) and specialized models like Magistral (vision+reasoning LLMs) ([24]) ([25]). Mistral’s strategy has consistently emphasized open models with permissive licenses (initially a research license for Large 2 ([26]), and now Apache 2.0 for all Mistral 3 models ([27])) and cost-efficiency (targeting an order-of-magnitude lower inference cost than competitors ([28])).

The launch of Mistral 3 in December 2025 (including the flagship Large 3 model) represented a culmination of these efforts ([1]) ([29]). Officially announced on Dec 2, 2025 ([1]), Mistral 3 introduced a unified model family: a suite of smaller dense models (Ministral-3B, 8B, 14B) and the giant sparse Mistral Large 3. According to Mistral’s announcements, this was their “next generation of open multimodal and multilingual AI” aimed at democratizing frontier AI ([1]). In particular, Mistral Large 3 was touted as “our most capable model to date,” featuring a sparse MoE architecture with 41B active and 675B total parameters ([1]), along with native vision capability and state-of-the-art instruction-following.

Several factors have made Mistral Large 3 noteworthy in 2025: it is fully open-source under Apache 2.0 (a notable contrast with closed models), it has extreme capabilities (256k context, multimodal), and it arrives at a time of strong demand for open LLMs in industry. The model has been integrated into major platforms (Hugging Face, AWS Bedrock, etc.) and accelerated operations (e.g. NVIDIA optimizations ([11])). This report will thoroughly examine everything known about Mistral Large 3—its design, performance, usage, and broader impact—drawing on official documentation, independent analyses, benchmark results, and corporate/academic commentary.

I. Mistral AI and the Evolution of the Mistral 3 Family

Understanding Mistral Large 3 requires context on Mistral AI’s philosophy and product strategy. As a European startup, Mistral positions itself as offering open, transparent, and cost-effective AI as an alternative to Silicon Valley models. Founders publicly emphasize openness: CEO Arthur Mensch (ex-DeepMind) has argued for transparency and has staffed Mistral with top French AI talent ([18]). Mistral’s “open core” approach means all models are released with permissive licenses, encouraging external development and deployment flexibility. This stands in contrast to fully proprietary competitors. For example, Mistral Large 3 and its related models use Apache 2.0 licensing ([1]), whereas contemporaries like GPT-5.1 and Claude 4.5 are closed (see Table 1).

Table 2 (below) summarizes the Mistral 3 series alongside its smaller Ministral variants. The flagship Mistral Large 3 is a sparse MoE LLM with 675 billion parameters (of which ~41B are “active” per token) ([1]) ([30]). The smaller Ministral 3B/8B/14B models are dense transformers, each released with base/instruct/reasoning versions ([31]) ([30]). Notably, all Mistral 3 family models appear to support an extremely long 256K-token context window ([2]) ([30]), enabling them to process entire long documents. All are trained on NVIDIA Hopper GPUs (H100/H200) in partnership with NVIDIA. ([4]) ([25]). The design is inherently multimodal: each model has native image-understanding capability built in (via an integrated vision encoder) ([32]) ([33]).

| Model | Architecture | Total Params | Active Params | Context (tokens) |

|---|---|---|---|---|

| Mistral Large 3 | Sparse Mixture-of-Experts | 675 billion | 41 billion | 256,000 |

| Ministral 14B | Dense Transformer | 14 billion | 14 billion | 256,000 |

| Ministral 8B | Dense Transformer | 8 billion | 8 billion | 256,000 |

| Ministral 3B | Dense Transformer | 3 billion | 3 billion | 256,000 |

Table 2. Specifications of the Mistral 3 model family. All models are open-sourced under Apache 2.0, and were trained on NVIDIA Hopper GPUs ([4]) ([30]). The “active” parameter count refers to how many parameters are used per token during inference.

Mistral’s development timeline: The Mistral 3 family follows a succession of earlier releases. In July 2024, Mistral Large 2 (123B dense, 128K context) was announced with improved reasoning, math, coding, and multilingual capabilities ([22]). Prior to that, Mistral’s 2023 models (Large 7B, the Mixtral 8×7B ensemble, etc.) established its early reputation for efficiency ([21]) ([20]). In mid-2025, Mistral also introduced vision-oriented “Magistral” models and updated its Smaller and Medium series. In this sequence, Mistral Large 3 represents the apex of scale and capability at end-2025. Its architecture (return to MoE) draws on Mistral’s past MoE experimentation (Mixtral) but at a much larger scale. According to Mistral, Large 3 was trained from scratch, not as a fine-tune of prior models ([34]), and was optimized for instruction-following at release time. A dedicated “reasoning” version of Large 3 is promised in the future to further enhance chain-of-thought.

Mistral’s corporate trajectory also frames the context. By late 2025 the company was valued near $10B (reports) and had formed strategic partnerships to support infrastructure. At Vivatech 2025, President Macron touted a French/Nvidia collaboration (“historic partnership”) to build a sovereign European AI compute platform (Project “Mistral Compute”) that will include 18,000 Nvidia processors ([17]). This underscores Mistral’s dual role: developing models and building Europe’s AI infrastructure. Moreover, major firms have started integrating Mistral’s technology: for instance, Reuters notes a strategic partnership with HSBC, giving the bank access to Mistral’s LLMs to improve productivity and customer service ([14]) (discussed in detail later). These adoption cases indicate that Mistral’s open LLMs, including Large 3, are already impacting industry.

In summary, Mistral Large 3 emerges from a rapid wave of innovation at Mistral AI, underpinned by deep-pocketed funding and strategic alliances. It reflects the company’s founding philosophy (open, high-performing, cost-effective AI) and Europe’s ambition to have sovereign, cutting-edge AI capabilities ([17]) ([35]). The rest of this report examines all aspects of Mistral Large 3 in detail.

II. Architecture and Training

Mixture-of-Experts design. Mistral Large 3’s core innovation is its sparse MoE architecture. Instead of a single monolithic transformer, it contains multiple “expert” sub-networks, with a routing mechanism that activates only some experts per token. Specifically, Large 3 has ~675B total parameters distributed across its experts, but only about 41B parameters are active on any given token ([36]) ([1]). That ~16:1 ratio (675/41) is built into the model’s construction ([37]). The advantage is dramatic: the model can store the knowledge capacity of hundreds of billions of parameters while keeping inference cost on par with a 40–50B dense model ([37]).

Mistral’s official documentation describes the architecture simply as a “granular Mixture-of-Experts” ([2]). More detailed accounts (e.g. independent reviews ([36])) note that the routing network selects a subset of experts per token, minimizing FLOPs. NVIDIA engineers further explain that Large 3 was built with optimized Blackwell attention and MoE kernels to fully leverage the GB200 NVL72 system ([11]) ([38]). In baseline terms, Large 3’s transformer layers are deeper and wider than typical 50–100B models, but thanks to MoE routing, each forward pass only engages a fraction of the network.

Training regimen. According to Mistral and NVIDIA, Large 3 was trained from scratch on a massive GPU cluster. Mistral’s announcement and independent reports agree that 3,000 NVIDIA Hopper H200 GPUs were used for training ([4]) ([34]). This scale of training (with HBM3e memory) is on par with other frontier models. Mistral’s team collaborated closely with NVIDIA: all Mistral 3 models were trained on Hopper accelerators ([4]) ([25]) to exploit the latest memory bandwidth. No existing model weights were reused—Large 3 is a fresh pretraining effort. The company also partnered with open-source framework developers: they released the checkpoint in NVFP4 format (using the llm-compressor tool) to support efficient inference ([11]).

Details on training data are scarce. As a general-purpose LLM, Large 3 presumably trained on vast multilingual text corpora (common to all large LMs), and also on image-text pairs (given its vision capabilities). Mistral does not publicly disclose its dataset composition, which is common practice. However, given the model’s strong multilingual performance, it likely included diverse sources (Web text, books, code, etc. in many languages) in training. Mistral’s own statements claim parity with the best open models on “general prompts” after training ([4]), indicating a broad, mixed corpus. Unlike some “closed” LLMs, Mistral’s open nature suggests no secret saturation of proprietary data.

After initial pretraining, Mistral Large 3 was fine-tuned for instruction following (the release included an instruction-tuned version ([39])). The deployable models include “base” (un-tuned) and “instruct” variants immediately; a specialized “reasoning” variant (with perhaps more chain-of-thought) is promised soon ([39]) ([40]). Post-training, the model achieved high scores on general NLP and conversation tasks. Notably, internal (and reported public) benchmarks showed Large 3 achieving parity with the best instruction-tuned open systems ([4]) ([33]).

Hardware and precision. A key design goal was to make Large 3 accessible to users without enormous compute. To that end, the model supports low-precision formats: NVFP4 (an 8-bit scheme) for SUMD Blackwell GPUs, FP8/FP16 for others ([41]) ([42]). Large 3 runs inference efficiently on NVIDIA’s NVL72 (GB200) with TensorRT-LLM and vLLM support ([11]). Thanks to these optimizations, the full 675B-parameter model can be hosted on a single modern 8×GPU node (e.g. 8×H100) without need for cumbersome inter-node tensor parallelism ([41]). Mistral collaborated with Red Hat and vLLM to release an NVFP4-format checkpoint for use on 8×H100 or A100 via vLLM ([11]). These engineering details mean that despite its scale, Large 3 does not necessarily require a multi-node supercluster for inference.

Multimodal integration. Unlike many text-only LLMs, Mistral Large 3 is explicitly multimodal. From the architecture, it “fuses” a vision encoder (~2.5B parameters) into the model ([32]). In other words, image understanding is native rather than an external adapter. This enables robust handling of images, PDFs, diagrams, etc., all within a unified model. The official blog and analysis highlight that the vision encoder allows Mistral 3 to perform OCR and structured document Q&A directly ([43]) ([32]). Experimentally, Mistral’s documentation offers a live OCR endpoint in its API for Large 3 ([44]). The tight integration means no separate vision-language interface is needed. In practical terms, this yields capabilities such as layout-aware document comprehension and information extraction from images.

Features and functionality. Mistral Large 3 retains the full suite of modern LLM capabilities. According to documentation, it supports chat completions, multi-turn “agent” style workflows, function-calling, structured output, and fill-in-the-middle (code completion) ([45]) ([46]). Its API includes endpoints for text chat, function calling, embedded tool use, moderated outputs, OCR, audio transcription, etc. ([45]). In effect, it can serve as a backbone for conversational AI, RAG (retrieval-augmented generation), code assistants, and multi-modal agent applications. These built-in tools make it a generalist “platform model” suitable for enterprise integration.

In summary, Mistral Large 3’s architecture is a cutting-edge sparse MoE transformer with an enormous parameter count but focused compute per token. It was trained on dedicated NVIDIA GPU clusters with multi-modal data, and engineered (with partners NVIDIA, Red Hat, vLLM) for high throughput and low-cost inference ([11]) ([42]). The result is an open-source model that rivals proprietary giants in capability while remaining relatively accessible to the open community.

III. Benchmarks and Capability Analysis

Evaluating Mistral Large 3 involves both synthetic benchmarks and real-world tasks. We break down its strengths and weaknesses across different domains:

General knowledge and language. Mistral Large 3 is a top-tier performer among open models on broad NLP benchmarks. For instance, on a balanced multilingual MMLU test (covering science, humanities, etc.) Large 3 achieves around 85.5% accuracy ([47]). This matches or approaches the best open models. It is comparable to closed models on general tasks: DataCamp reports that Large 3 “outperforms” its open-source peers (e.g. DeepSeek-3.2, Kimi-K2) on important benchmarks ([48]), and Neuromatch style analyses indicate it sits near the top of the open leaderboard. In crowd-sourced evaluations (e.g. LMSYS Chatbot Arena), Mistral Large 3 heralded with an Elo score ≈1418, placing it #2 among open-source non-reasoning models (and #6 overall) ([49]) ([7]). These results suggest that for everyday inquiries, summarization, translation, and conversation, Large 3 is broadly reliable.

However, on the hardest “system-2” reasoning tasks, Large 3 shows a noticeable gap compared to specialized models. On GPQA Diamond (a challenging graduate-level reasoning benchmark resistant to fact-matching), Large 3 scores only about 43.9% ([9]), whereas specialized open “agentic” models like DeepSeek-v3.2 and Kimi K2-Thinking are in the 70–85% range ([9]). Similarly, on advanced math contests (MATH/AIME style) Large 3 is good but not record-setting: internal reports (e.g. on AIME ’25) indicate mid-90s percentile performance, which is solid but below some Chinese models focused on math ([50]). In summary, Large 3 is a competent generalist with strong knowledge retrieval, but it is not finely tuned for extended chain-of-thought reasoning. Its designers appear to have optimized for throughput and broad coverage rather than maximum reasoning depth, an approach consistent with “System 1” pattern-matching behavior ([51]). This is also evident in its high LMArena Elo: it wins head-to-head on typical prompts, but specialized reasoning models (like DeepSeek or Google’s Pathfinder series) take the lead on formal logic.

Multilingual and translation performance. Large 3 is explicitly multilingual. Mistral AI reports that it has strong capabilities in 40+ languages ([52]) ([53]). Anecdotally, reviewers have tested its translation abilities. For example, a DataCamp author gave Large 3 a real Arabic screenshot transcription and multi-step troubleshooting prompt; the model handled Levantine dialect correctly in most steps, even though it made some translation anomalies ([54]) ([55]). Experts note the model’s native support for dozens of languages and coding languages ([56]) ([57]). Benchmarks like multilingual MMLU show that Large 3 maintains high accuracy across languages, coming in second only to far larger closed models like LLaMA 3.1 405B on a multilingual MMLU test ([58]). In practice, Large 3 is well-suited for global applications (multilingual chatbots, support desks, document processing).

Coding and software tasks. The Large 3 model is strong at coding tasks, reflecting its large context and broad training data. Independent testing reports around 92% pass@1 on HumanEval (Python) ([8]), placing it among the highest open-source models on classic coding benchmarks. Its code output is described as “clean” and modular ([59]). However, on more modern and challenging coding suites (e.g. LiveCodeBench, which includes complex or anti-corruption tasks), Mistral L3 ranks in the second tier. It performs slightly behind the best code-specialist models (where open experts exceed 80% on HumanEval), and trails behind highly optimized agentic systems on cutting-edge tasks ([59]). In other words, Large 3 is an excellent general coding assistant for common problems, but it is not (as of now) the absolute top performer on niche competitive coding. Notably, Mistral AI also released a specialized “Codestral” model (not the subject here) targeting code tasks.

One advantage of Large 3’s code ability is its long context. It can understand entire repositories and do sophisticated code editing via its fill-in-the-middle (FIM) functionality ([44]). In benchmarks like CodeContests or Codeforces (SWE-bench), Mistral L3 achieves solid Elo scores (e.g. ~1487 WebDev Elo ([60])), reflecting strong performance in software-related tasks. Its code generation is aided by built-in JSON output and function calling (native to its generation API, per [42†L29-L34]). These features mean developers can use Large 3 for automated scripting, code explanation, and refactoring tasks in enterprise workflows.

Conversation and alignment. As an instruction-tuned chat model, Mistral Large 3 is described as having a “natural, coherent conversational style” ([61]). Its training included efforts to reduce inconsistencies and maintain friendly tone. On crowdsourced chat tests (Arena, Chatbot battles), it wins a majority of prompts against other openings ([49]). Independent human evaluations suggest it is quite helpful and creative on typical support/advice queries. However, its alignment (safety/hallucination) is a noted weakness in some assessments. A “SimpleQA” factual accuracy test reports a relatively low score (~23.8%), implying it sometimes hallucinates confidently ([62]). This is a common problem for LLMs, but it appears Mistral L3 is more prone to hallucination than some peers. The company emphasizes that the model recognizes limits (e.g. returning “I don’t know” when unsure) and fine-tuned Large 2 to minimize false statements ([63]). For Large 3, no formal reports of truthfulness are public yet, but users should be aware of standard LLM pitfalls. Because it is open, organizations can mitigate this risk by augmenting Large 3 with retrieval or tool constraints. Indeed Mistral’s API supports plugging in external knowledge sources at runtime.

Efficiency and compute performance. Large 3’s design yields a good efficiency profile for its class. Inference benchmarks (on GPU hardware) place it as comparatively fast. For example, data from NVIDIA show that on their GB200 NVL72 system, Large 3 with NVFP4 quantization can push well into the multi-million tokens/sec per megawatt range (far exceeding predecessor H200) ([64]) ([65]). External tests report that the smaller 8B Ministral model (for comparison) can do ~50–60 tokens/sec on a single high-end GPU ([66]) – suggesting Large 3 (on 8×GPU) could be roughly comparable to other 100B+ models on a cluster. A key metric is token efficiency: Mistral claims that their models often require far fewer generated tokens to accomplish tasks compared to competitors ([67]). In one example, a real-world prompt generated 1.7× fewer tokens on a Mistral model than on a competitor, implying lower usage cost. While raw inference steps are heavier (41B flops vs ~2B for small models), the MoE and optimized kernels keep latency decent.

Overall, Mistral Large 3 sets a new bar for open LLMs. On broad benchmarks it is competitive with closed models, and it surpasses peers on code and general tasks. However, on specialized reasoning and factual precision tasks, it yields to more tailored systems ([9]) ([68]). The design choices (focus on throughput and multimodal/general ability) reflect Mistral’s goal of a versatile enterprise-grade generalist, rather than a narrow expert. Future “reasoning” variants, as promised, may address some of these gaps.

IV. Real-World Use Cases and Case Studies

Enterprise adoption. Early indicators suggest major enterprises are integrating Mistral’s models into operations. On December 1, 2025, HSBC announced a multi-year strategic partnership with Mistral AI to deploy generative AI broadly across the bank ([14]). Under this deal, HSBC will have access to Mistral’s current and future LLMs and collaborate on tools to enhance productivity. HSBC already reports 600 internal AI use cases and 20,000 developers using AI coding tools; it plans to expand AI into credit processing, customer onboarding, fraud detection, AML, and more ([69]). Both HSBC and Mistral emphasize responsible deployment, focusing on data privacy and transparency ([70]). HSBC’s CIO noted that AI’s long-term potential is enormous and such partnerships lay groundwork for safe, bank-wide AI systems ([71]).

Another example is BNP Paribas, a major French bank. Public reports indicate BNP Paribas formed a collaboration with Mistral (while Mistral was still in its early days) to apply AI in banking ([15]). The exact use cases are not fully detailed in open sources, but such partnerships typically aim at knowledge management, document automation, and customer service chatbots. Mistral’s technology is well-suited to these areas. Similarly, insurance, retail, and manufacturing firms have shown interest. The core attractor is that Mistral offers an advanced LLM without vendor lock-in; companies can self-host models or use Mistral’s API without dependencies on U.S. tech giants ([72]).

Mistral’s Chatbot (Le Chat). In mid-2025, Mistral launched Le Chat Enterprise, an AI chatbot platform for corporate customers ([73]). Le Chat integrates the Mistral LLMs (including likely Large 2/3) into enterprise apps like SharePoint and Google Drive ([73]). Within 100 days, Mistral tripled its revenue, driven by demand from Europe and non-U.S. markets ([74]). The company explicitly touts that clients can deploy the Mistral chatbot on their own hardware (reducing reliance on U.S. cloud providers) ([75]), a major selling point for privacy-conscious organizations. The success of Le Chat underscores that Mistral’s core LLM technology, including Large 3, is already migrating from research to production.

Vision and Robotics (Helsing alliance). Mistral has also targeted industrial and defense applications through partnerships. In February 2025, it teamed with European robotics/vision firm Helsing to develop AI models for “vision-language-action” tasks (e.g. processing video and controlling drones) ([24]). This alliance produces specialized models, but it reflects Mistral’s emphasis on multimodal AI (Large 3 can process images) and on European technology sovereignty. While not specific to Large 3, it illustrates real-world domains (drone control, automated surveillance, etc.) where Mistral’s multimodal LLMs could be applied. The public statements emphasize that small, efficient European models can compete with U.S./Chinese output ([35]), and that open-source customization will be key.

Developer ecosystems and third-party services. Mistral Large 3 has been rapidly integrated into various AI platforms. It is available through Mistral’s own cloud platform (Mistral AI Studio), major cloud providers (AWS Bedrock ([76]), Azure, IBM WatsonX), and ML marketplaces (Hugging Face) ([76]). Additionally, startups like Modal, OpenRouter, Fireworks, and Together AI have added Large 3 support ([76]). This broad accessibility means that organizations can use Large 3 via APIs, self-hosting, or third-party services. For example, on Hugging Face one can download models or deploy them on Hugging Face Hub. The AWS and Azure integrations allow businesses to use Large 3 within their existing cloud workflows. In the open-source community, Mistral 3 models were quickly ported to efficient inference engines: e.g. community builds of Mistral L3 in GGUF format (quantized) are already circulating on Hugging Face and used in Llama.cpp and vLLM.

Regulatory and strategic context. Europe’s emphasis on controlling AI technology has created demand for home-grown models. President Macron’s comments on Mistral-Nvidia collaboration highlight that this project should reduce European dependence on U.S. tech ([17]). Companies like Orange or Thales have signaled interest in on-shore AI infrastructure ([77]). Mistral Large 3, in this context, is part of a push to build European AI “sovereignty.” EU governments and industry leaders have publicly extolled Mistral as a national champion. For instance, the French government and large enterprises often cite Mistral in AI strategy discussions ([17]) ([35]). Partly due to regulatory inertia, European firms prefer to rely on Mistral’s open models that can be heavily audited and controlled, rather than opaque U.S. systems. This has translated into concrete commitments (data center projects, licensing discussions like with MGX ([19]), etc.).

Summary of use-case findings. In practice, Mistral Large 3 and its family are being positioned as an enterprise AI platform. The HSBC case study in banking illustrates priorities like knowledge retrieval, coding assistance, and fraud detection ([78]). Other sectors (defense, telecom, insurance) have parallel needs (document analysis, multimodal data fusion, customer chatbots). Early results suggest Large 3 performs well on typical enterprise tasks: handling foreign-language customer queries, automating coding utilities, summarizing lengthy reports, etc. However, Mistral advises that the model should be seen as one tool in a pipeline. For example, to mitigate the hallucination issue, users often combine Large 3 with retrieval-augmented generation (RAG) systems or tool loops.

Overall, the real-world deployments of Mistral Large 3 remain in early stages (the model is only just released). But the momentum is clear: major organizations are preparing to adopt it, and initial feedback emphasizes its accessibility (open license, on-premise option) and cost advantages as key differentiators in practice ([71]) ([75]). These factors will only grow in importance as AI goes mainstream in regulated industries.

V. Performance in Detailed Domains

The figure of merit for any LLM is how it performs on specific tasks. Here we delve deeper into Mistral Large 3’s empirical strengths and shortcomings, supported by quantitative data and expert assessments.

A. Natural Language Understanding and Generation

On comprehensive language understanding tests, Mistral Large 3 consistently ranks among the top open models. The LLMSlab (LMArena) leaderboard assigns Open-Source LLMs Elo scores from human ratings. Mistral L3 debuted with ~1418 Elo points ([49]), making it #2 among non-reasoning models (open source) at publication time. In absolute terms, it ranked #6 among all open-weight models ([6]) ([7]). This placement is significant: it indicates Large 3 often outperforms or ties with previous open leaders like Llama 3.1 (Maverick) and Claude 3.5 Sonnet, and trails only behind cutting-edge closed models (Gemini 3 Pro, GPT-4o).

MMLU (Massive Multitask Language Understanding) is another benchmark. On an 8-language variant, Large 3 scores ~85.5% ([47]). For context, this is close to GPT-4’s reported performance and better than most open models. On the MMLU-Pro subset (a harder variant), Large 3 still beats 80%, indicating solid reasoning under standard conditions ([47]). DataCamp also notes that Large 3 outperforms Kimi-K2 and Deepseek-3.1 on general benchmarks ([48]). On simpler QA benchmarks, sources suggest Large 3 is very strong: it likely tops open QA leaderboards. However, on trickier fact checks, Mistral L3 has room for improvement: independent tests found a low "SimpleQA" score (around 24%) ([62]), meaning it often confidently fabricates plausible answers when facts are missing. This weakness is typical of large LMs, but the magnitude suggests caution; that said, Mistral’s documentation indicates the model was trained to say “I don’t know” more often when uncertain ([63]), which should somewhat limit blatant errors. Overall, Mistral L3’s natural language abilities appear exceptional for an open model: it reads and generates fluent text, follows lengthy instructions coherently (thanks to its 256K context), and supports many languages with minimal prompting.

B. Multimodal and Visual Tasks

As noted, Large 3 integrates a vision encoder. Its proficiency with images can be assessed through tasks like image captioning, visual question answering, and document OCR. While we lack standardized benchmarks for Mistral vis-à-vis other models in image tasks, anecdotal evidence is promising. Reviewers have performed end-to-end tests: for example, Mistral was given a screenshot containing Arabic text (an error code) and asked to translate and generate a troubleshooting guide. The model successfully output coherent translations in both English and Levantine Arabic ([54]) ([55]). This shows accurate OCR (reading the error message), contextual understanding (concept of a satellite dish issue), and back-translation in dialect. In these experiments, Large 3’s image-reading was on par with expectations: it correctly read almost all Arabic characters, with only a few minor mistakes (e.g. confusing similar letters) ([79]). It then performed a complex multi-step task, suggesting that the vision+language integration works end-to-end. Such tests, albeit anecdotal, echo Mistral’s claims about “layout-aware document understanding” ([32]).

For competitive comparison, Google’s Gemini and other multimodal models tout video and audio inputs, which Mistral L3 does not natively support (it handles static images only). But on image & text moderation or Q/A tasks, Large 3 should be highly capable. Its architecture being tight, it doesn’t degrade on conditioned tasks. In short, Mistral Large 3 is (according to its designers) state-of-the-art for multimodal open models. It should excel at scenarios like analyzing diagrams, indexing scanned PDFs, and assisting with image-based inquiries. Again, detailed benchmarks (like COCO captioning scores) are not published yet, but the integrated design bodes well. For raw performance, the NVIDIA blog suggests that the vision encoder is 2.5B of the total 675B, implying roughly 673B language parameters with 2.5B visual parameters ([32]). This lean integration suggests minimal overhead for vision tasks.

C. Code and Software Engineering

Mistral Large 3 was not specifically billed as a “coding specialist,” but it nonetheless shows excellent coding skills consistent with a 75–125B-sized LLM. Benchmarks illustrate this:

- HumanEval (Python): Mistral L3 reaches ~92% pass@1 ([8]). This means it solves most straightforward coding problems correctly, a performance rivaling top open models. In comparative terms, that is near the state-of-the-art (GPT-4/Claude 3.5 reportedly score similar or slightly higher). The code it generates tends to be clean, idiomatic, and relatively compact ([59]).

- LiveCodeBench / CodeContests: On newer, contamination-resistant coding tests (e.g. LiveCodeBench v6), Mistral L3 lands in the second tier. While it performs well on many tasks, it falls short of the best open code models (which hit ~80%+) ([80]). It also lags behind agentic LLMs that iteratively code/debug.

- Multilingual coding: Mistral’s proficiency extends to many programming languages. It was likely trained on 80+ languages in code corpora ([81]). On multilingual code benchmarks, Mistral L3 ranks near the top open models. DataCamp notes L3 is currently “the top open-source coding model on the LMArena leaderboard” ([82]). Another analysis shows Large 3 performing comparably with large models on Google’s coding challenge benchmarks (SWE-Bench) ([83]). The DataCamp comparative table even lists Mistral L3 as “Strong multilingual” in coding ([84]).

- Coding context: The 256K context is a huge advantage for code tasks. Large programs (multi-file code, large repos) can be processed in one go. Mistral supports prefix-completion and fill-in-the-middle (FIM) for coding, letting it act as a multi-step coding assistant. For large projects, one can feed entire files or documents, which many LLMs cannot handle.

In real-world software development use cases, Mistral Large 3 would serve as a highly capable assistant for code comprehension, generation, and explanation. It can generate code with fewer tokens than some competitors (maintaining brevity). A DataCamp experiment found that Large 3 completed a coding task with focused output, indicating efficient instruction obeyance ([85]). Developers aiming to reduce token costs or run models on-device will appreciate this efficiency. However, for the absolute toughest challenges (e.g. adversarial programming competitions), specialized models might perform better.

D. Mathematical and Reasoning Abilities

On standard math benchmarks (GSM8K, MATH), Mistral Large 3 demonstrates strong but not record-breaking ability. Official and third-party sources indicate:

- GSM8K (arithmetic word problems): Mistral L3 likely scores in the high 70s or low 80s accuracy (specific figures are not public). This is consistent with “mid-90s percentile on internal math benchmarks” reported by cloud catalogs ([50]).

- Contest math (MATH/AIME): Mistral’s performance is good but behind state-of-art. For instance, at AIME 2025, its 14B variant solved 85% of problems ([86]), which is extremely high for that parameter range, but specialized Chinese LLMs and large hybrids have been pushing into 90+% or perfect scores. On the hardest MATH subset tasks, Large 3 tends to reach top-10 among open models but not #1.

- Comparison to peers: On math, Mistral L3 clearly surpasses its predecessor Large 2 (thanks to more scale and MoE), but closes the gap with Google’s proprietary models (“GPT-4o scores ~GPT-4”, “Gemini 3 Pro scores 91.9% on GPQA Diamond” ([68])). Officially, Gemini 3 Pro reaches 91.9% on GPQA Diamond and 1501 Elo ([68]), compared to Large 3’s ~1418 Elo. GPT-5.1 and Claude Opus 4.5 reportedly excel on math and reasoning (GPT-5.1 “improved AIME/Codeforces” ([13]), Claude scores ~80.9% on Codeforces bench). Thus, while Mistral L3 is in the upper tier, it is not the outright champion on pure math tasks.

Importantly, Mistral Large 3’s design does allow enhanced reasoning via tool usage. Its architecture supports function-calling and chain-of-thought (through its “reasoning” variant pipeline). The company has indicated that a reasoning-optimized variant of Large 3 is forthcoming, which likely will boost these domain scores. For now, we note that on tasks requiring iterative logic, Large 3 is reliable but cautious. It is more oriented to direct recall and pattern matching than to “deep thinking.” This has pros and cons: it means fewer hallucinations in general knowledge tasks, but it also limits performance on multi-step puzzles ([51]).

Summary of Performance Metrics

A high-level summary of Mistral Large 3’s performance is captured in Table 1 (from DataCamp) and our above analysis:

| Benchmark Category | Mistral Large 3 | Top Competitors | Notes |

|---|---|---|---|

| LMArena Elo (OSS) | ~1418 (#2 open) ([49]) | Gemini 3 Pro ~1501 Elo (<a href="https://www.datacamp.com/blog/mistral-3#:~:text=Top%20Benchmark%20%20%7C%20,%245%2F%2425%20per%20M%20tokens" title="Highlights: Top Benchmark | ,$5/$25 per M tokens" class="text-gray-400 text-[10px] hover:text-gray-500">[83]) (1st open) |

| MMLU (multilingual) | ~85.5% accuracy ([47]) | ~90% (GPT-4/G3) | Comparable to the best open models, slightly below top proprietary. |

| GPQA Diamond (hard reasoning) | ~43.9% ([9]) | ~78–85% (DeepSeek, Kimi Thinking) | Special reasoning models score far higher, showing Large 3 is not top in deep chains. |

| HumanEval (code) | ~92% pass@1 ([8]) | Similar to GPT-4o (95%+) | Among top open models; slight behind GPT-4o. |

| LiveCodeBench (code) | 2nd tier (not quantified) | ~80%+ (code-specialist) | Strong generalist coding, below code champions. |

| LLaMA Fine (coding) | 7th/8th (approx) ([8]) | 1st–2nd (GPT-4o, Claude) | Competent but not champion on fresh code tasks. |

| Task completion (Arena) | Very reliable, Elo ~1418 ([49]) | GPT-4o, G3 Pro higher | Consistent, helpful responses in chat; weaker on core facts. |

| Hallucination (SimpleQA) | ~23.8% ([62]) | Claude, GPT-5 very high | More hallucination-prone than some (a notable weakness). |

| Multimodal (image tasks) | Strong, native VQA/OCR | Supports text+image; Gemini adds video/audio | Capable on image+text, but does not handle video/audio. |

| Context length | 256K tokens ([2]) | 272K (GPT-5.1), 1M (Gemini 3 Pro) ([87]) | Extremely long, larger than GPT-4's 128K; opens new use cases. |

| API Cost | ~$0.5 in / $1.5 out per 1M tokens ([10]) | GPT-4o: ~$2/$12 (per 1M) ([88]) | Roughly 80% cheaper than GPT-4o ([88]). |

Table 1. Comparison of Mistral Large 3 with leading LLMs. “Open-source” (OSS) Elo from LMArena refers to open-weight models only. Source: DataCamp ([13]), NVIDIA ([30]), and independent analyses ([8]) ([62]). (See text citations for details.)

From Table 1 and the above discussion, the picture is clear: Mistral Large 3 is a top-tier open LLM, excelling in generalist tasks and code, with a unique open license and very large context. In absolute peak performance it slightly trails the very latest closed models on some specialized benchmarks, but it closes the gap significantly. Critically, its price/performance ratio (both token efficiency and API cost) is very attractive ([67]) ([88]).

VI. Deployment, Infrastructure, and Ecosystem

A powerful model is only as useful as its accessibility and integration into real systems. Mistral Large 3 has been engineered and positioned for broad deployment across different hardware and software stacks.

A. Hosting, Serving, and Cost

Mistral AI offers Large 3 via multiple channels. On the cloud side, its flagship product Mistral AI Studio provides an easy API to call any Mistral 3 model. Amazon (AWS) Bedrock now supports Mistral Large 2 (and presumably L3 soon) ([89]), and Microsoft’s Azure and IBM WatsonX platforms similarly announced Mistral integration. Hugging Face hosts both base and instruct checkpoints for Large 3, accessible through HF’s API or for download ([76]). Companies like Modal or OpenRouter provide managed API endpoints with High Availability for Large 3 as well. This means organizations can deploy Large 3 as a fully-managed cloud service, similar to any other foundation model.

For on-premise or local deployment, Mistral Large 3 can be self-hosted. Thanks to the NVFP4-optimized checkpoint produced by llm-compressor ([11]), a single node with 8×H100 or 8×A100 GPUs can run the full model. Nvidia’s technical blog confirms that Mistral Large 3 is deployable on NVIDIA’s GB200 NVL72 systems (up to 8×Blackwell GPUs) with full feature support ([11]) ([42]). In practical terms, this means an enterprise with a multi-GPU server can run Large 3 behind their firewall, retaining full data control. NVIDIA and Mistral also collaborated on a vLLM-based solution to run Large 3 efficiently on 8×A100, which manufacturers like Lambda, CoreWeave, etc. are adopting. Additionally, community ports (like llama.cpp or vLLM) allow running Large 3 in lower precision on lower-cost hardware, albeit with performance trade-offs. The key point is that Large 3 no longer requires battalions of GPUs or complex parallelism; it is engineered for practicality. That practicality is aided by variable quality-of-service options: Businesses can pay to use the high-precision GB200 NVL72 path for super-low latency, or use lower-cost quantized A100 inference for batch jobs.

The cost of inference is heavily advertised as an advantage. Mistral’s internal pricing for Large 3 is about $0.50 per 1M tokens in, $1.50 per 1M tokens out (caller and chat pricing) ([10]). By comparison, OpenAI’s GPT-4o mini (and others) cost roughly 10–20× more per token ([88]). This price difference comes from both architectural efficiency and business strategy (Mistral aims to be affordable). Independent commentary notes that Large 3’s cost per token is ~80% lower than GPT-4o ([88]). For enterprises, this dramatically lowers the barrier to running large-scale AI services. At HSBC, for example, their 20,000 AI developers will incur far lower operational costs if they use Mistral’s models. Even for self-hosting, the GPU-time per request is lower due to MoE sparsity optimizations ([37]).

B. Software Ecosystem and Developer Tools

Mistral Large 3 is integrated into the wider ML software ecosystem. Officially supported frameworks include vLLM (for high-throughput Python serving) and NVIDIA’s TensorRT-LLM (for optimized GPU execution) ([11]) ([90]). The NVIDIA blog confirms support for vLLM (with NVFP4), SGLang, TensorRT-LLM, and also popular open tools such as LLaMA.cpp and Ollama ([90]). In fact, the table at [47] shows that LLaMA.cpp (a CPU/mobile inference library) supports the 3B/8B/14B Ministral models (and presumably could support quantized L3 as well), while Ollama (an AI local deploy framework) supports all through either NV or CPU modes ([90]). In short, developers can run Large 3 either via cloud APIs or as self-hosted instances on NVIDIA hardware or even on some CPU/edge devices (for the smaller models).

The Mistral API (like OpenAI’s) provides advanced features. It supports function calling (invoking external tools or code) and structured JSON outputs ([46]) ([81]). It also allows prefix generation and “fill-in-the-middle” which is useful for code editing tasks. These built-in capabilities are essential for building intelligent agents or multi-tool applications. Mistral’s docs mention endpoints for chat, tool orchestration, OCR, and even audio transcription ([45]) (the last likely via separate vocoder models in the Mistral ecosystem). For example, a developer could use the chat/completions endpoint combined with audio/transcription to build a voice assistant, or use chat/completions with tool integration to build an autonomous customer support bot. These interfaces make Large 3 a multi-purpose “AI platform model.”

Mistral also offers fine-tuning and custom training services ([91]) for enterprises that need domain-specific adaptation. Because Large 3 is open, companies can further train it on their data. This is a market opportunity; Mistral advertises “custom model training” to tune models for particular vocabularies or user preferences. For instance, an insurance firm might fine-tune Large 3 on legal contracts or claim documents. While not a direct technical difference of the model, this service capability is part of the Large 3 story.

C. Hardware Partnerships

Nvidia has played a key role beyond training. The NVIDIA technical blog highlights a co-design effort: their engineers wrote wide expert parallelism and MoE kernels that allow the 675B model to run efficiently on NVL72 ([11]) ([90]). They also integrated speculative decoding support to handle the very long context (enabling partial parallelism between prefill and decode) ([92]). This means that Large 3’s 256K context doesn’t cripple throughput. Another innovation is support for NVIDIA’s new NVFP4 precision format, which is native on the forthcoming Blackwell (GB200) GPUs ([41]) ([90]). With NVFP4, the model can run inference in 8-bit while preserving most of the accuracy of FP16. The NVIDIA engineers show that on GB200, a tuned Large 3 can achieve over 5 million tokens/sec per Megawatt (see Figure 1 in [47]), far more than on H200. ([93]). In practice, this indicates high energy efficiency for datacenter inference.

For smaller-scale deployment, the NVIDIA blog also notes that the Ministral 3 models can run even on consumer GPUs: e.g. an RTX 5090 can do ~385 tokens/sec for the 3B Instruct model ([94]). This underscores that the Mistral 3 family spans from massive datacenter AI down to local devices. Collaboration with software projects (Ollama, Llama.cpp, Jetson vLLM, etc. ([95])) further ensures that users have easy paths to use these models.

D. Cost, Efficiency, and Sustainability

So far we have noted Blackwell-level optimizations that improve performance-per-watt. Another angle is sustainability. Large models have huge carbon footprints from training and inference. Mistral has proactively addressed this concern. In August 2025, Mistral published a lifecycle analysis of its previous model (Large 2) ([16]). That study (done with ADEME/Carbone 4) reported that training Large 2 emitted 20.4 kilotons of CO₂ and used 281,000 cubic meters of water by Jan 2025 ([16]). Although these numbers are large, they are framed alongside industry benchmarks: Mistral points out that a single 400-token query on Large 2 costs ~1.14g CO₂ (10 sec of video streaming equivalent) ([96]). The company advocates for “appropriate model scaling”: use Large 3 only when needed, and use smaller Ministral models for lightweight tasks to reduce waste ([96]). Importantly, Mistral plans to build a dedicated low-emission data center in France ([97]). They also mention working on updating metrics and standards for the whole industry. While no data yet exists for Large 3, these initiatives signal that Mistral is mindful of the environmental cost. The use of efficient GPU chips (H100 and the next-gen Blackwell) also contributes to lower energy per query. In short, Large 3’s efficiency engineering (and corporate sustainability tools) aim to mitigate the otherwise huge carbon footprint of a 675B model.

VII. Implications, Challenges, and Future Directions

Mistral Large 3’s release has multiple implications for AI research and industry. We discuss some key points:

Open AI Ecosystem: Large 3 serves as a new benchmark for open-source AI. By releasing such a powerful model under an open license, Mistral is raising the bar for transparency and community involvement. Researchers and developers can inspect, fine-tune, and embed large-scale AI in ways not possible with closed models. This democratization could accelerate innovation (e.g. enabling academics to study MoE strategies at scale). On the other hand, it intensifies competition: closed-model providers like OpenAI and Google face pressure to justify their proprietary edge. The DataCamp and Medium analyses both note this: Mistral L3 “positions itself as an open-weight, enterprise-ready generalist” ([98]), directly challenging proprietary giants. The wide accessibility may also provoke policy interest: regulators will watch how open models handle content safety and data privacy.

Industry Adoption – Benefits and Risks: The ease of integrating Large 3 (open license, multilingual, multimodal) is enabling many new applications. Sectors like finance (HSBC, BNP) and defense (Helsing) view it as a strategic asset. This suggests a shift: enterprises may no longer be strictly reliant on U.S. cloud incumbents. However, risks remain. Large LLMs still hallucinate and encode biases. Mistral must maintain a strong stance on ethical AI usage. Stakeholders (HSBC’s CIO) already emphasize responsibility ([71]). The open license means any user (or attacker) can see the model’s weights; this helps transparency but also means potentially malicious groups could repurpose the model. Mistral and community must thus continue research on alignment, safety, and watermarking.

AI Compute and European Strategy: Macron’s praise of the Mistral-Nvidia partnership underscores AI as a matter of national techno-strategy ([17]). The vision is to build European “data gravity” so that AI workloads stay in Europe. Mistral Large 3 fits into this strategy. For one, it ensures that cutting-edge AI R&D remains partly European. Additionally, projects like “Mistral Compute” (a sovereign AI datacenter) reflect that models like Large 3 could be licensed for use on domestic chips (esp. if Europe invests in its own semiconductors). The ambition to increase Europe’s GPU capacity tenfold (as Nvidia’s Huang said ([99])) goes hand in hand with having local open models. In sum, Mistral L3 acts as both a proving ground and a product in Europe’s AI independence narrative.

Economic Implications: The ROI on AI models is enormous. Partnerships (HSBC’s, Apple was rumored too, etc.) suggest investments into integrating Mistral L3 could pay off via massive productivity gains. On the flip side, the valuation skyrocketing to $10B (teasing a possible IPO) reflects a belief that open AI startups can be hugely profitable. Mistral’s approach (selling API access, fine-tuning services, enterprise contracts) is clearly attracting capital ([19]) ([15]). However, competition is brutal: as DataCamp notes, models like Mistral L3 are absent from the newest arms race entrants (Gemini 3, GPT-5) in some metrics ([68]), meaning Mistral must continuously innovate or risk losing forward momentum. Nielsen’s “feature war” will likely trigger further iterations (e.g. future Large 4, or major updates to L3).

Technical Challenges and Next Steps: There are known technical limitations. The hallucination rate, as highlighted, is one. It will require iterative fine-tuning or hybrid systems (QA with retrieval) to improve factuality. The reasoning gap is another: even Mistral acknowledges a specialized reasoning variant is needed. We may see a “Mistral Large 3R (Reasoning)” soon, possibly using chain-of-thought pretraining. Similar to Anthropic’s distinction between “assistant” and “Claude/Claude Sonnet”, Mistral’s variant could push accuracy on complex tasks. Additionally, scaling beyond 675B will be hard; if the open community demands even larger moats, Mistral (or someone) would have to invest in training horsepower beyond even the 3,000 H200s used.

Future Models and Directions: Mistral’s roadmap likely includes releases beyond L3. The announcement teased upcoming reasoning versions of both Large 3 and Ministral 3 ([39]). Moreover, given the model’s multimodal base, possibly new variants focusing on video or audio could emerge (although currently Mistral 3 only handles static images). There may also be domain-specific spin-offs: after “Codestral” for code and “Magistral” for reasoning, we might see “AudioAI Mistral” using Large 3’s multilingual text+small audio portion. Lastly, integration with robotics and IoT (through collaborators like Helsing and NVIDIA’s Jetson/Edge ecosystem ([100]) ([101])) will broaden applications. In academia, Large 3’s release under Apache fosters research: custom compression, knowledge distillation, alignment (did Mistral provide alignment eval suites?), and data licensing issues will occupy researchers.

In summary, Mistral Large 3 is a landmark in the open AI lifecycle. Its successes and shortcomings will shape the path forward. Many see it as proof that “top performance” no longer demands secret sauce, only community-driven engineering and GPUs. If Large 3’s democratizing effect mirrors earlier open releases (like Meta’s Llama or Google’s BERT), it may accelerate the overall improvement of LLMs. Models will quickly spar in the public arena. The greatest uncertainty is whether open models can sustainably match and surpass closed ones in the long run, or whether hybrid approaches (open models fine-tuned with proprietary knowledge) will dominate. Mistral is betting on openness, and Large 3 is their flagship gambit in that vision.

Conclusion

Mistral Large 3 emerges as one of the most significant LLM releases to date. It combines extremely large scale (675B parameters) with open-source philosophy and advanced features (256K context, multimodal capabilities) ([1]) ([2]). According to published data, it achieves world-class performance in general language and coding tasks while being orders of magnitude more accessible and cost-efficient than closed models ([47]) ([13]). The architecture (sparse MoE) allows it to embed vast knowledge yet run on a single 8×GPU server ([37]) ([11]). Its release under Apache 2.0 makes it a powerful tool for researchers and enterprises alike.

Our analysis has drawn from Mistral’s own announcements, independent reviews (Barnacle Goose’s Medium report【14 and DataCamp【16), official technical documentation (Mistral docs【2【27, NVIDIA blog【47), and news coverage (Reuters【17【41, Le Monde【18【24). These sources consistently highlight Large 3 as a permissively licensed, performance-leading LLM. We have seen that Large 3 excels at broad instruction following, code generation, and multilingual dialogue, while ceding some ground on deep reasoning benchmarks. Its unprecedented 256K context enables new capabilities for processing extremely long documents. Performance benchmarks (LMArena Elo, MMLU accuracy, HumanEval coding score) confirm that Large 3 is in the top tier of current LLMs ([47]) ([7]).

On the engineering side, Mistral’s collaboration with NVIDIA and others has yielded a highly optimized deployment: support for NVFP4 quantization, new MoE kernels, and compatibility with vLLM/TensorRT ([11]) ([90]) means that Large 3 can be run efficiently across GPU hardware. The economics of inference (token costs, GPU usage) strongly favor Mistral relative to competitors ([67]) ([88]). The eventual impact of these savings will likely be huge for organizations scaling AI.

The release of Mistral Large 3 also has broader implications. It is a milestone in the democratization of AI: for the first time, an open model is clearly competitive with leading closed models on many metrics. This pressures other companies to be either more open or more demonstrably better. It’s also a validation of Europe’s strategy to stay at the forefront of AI by fostering domestic champions. The uptake by global banks and firms suggests Mistral (and by extension Large 3) could influence enterprise AI adoption beyond what any single model has achieved before.

However, challenges remain. Mistral and the community must continue work on alignment and factual accuracy, especially given the modest SimpleQA score ([62]). The skeleton of Large 3 provides an excellent foundation, but as with all LLMs, there is no single “finished” product—only continual improvement. Future versions (such as the promised reasoning variant) and ongoing fine-tuning will be critical. Additionally, monitoring and governance will be needed to ensure such powerful open models are used responsibly.

In conclusion, Mistral Large 3 represents a quantum leap for open LLMs and a harbinger of how the AI landscape is changing. It demonstrates that with sufficient resources and smart engineering, an open model can match the “closed” titans in most respects. For practitioners and researchers, it opens new possibilities to experiment and build without waiting for API keys. For industry leaders, it offers a cost-effective and sovereign AI tool. And for society, it raises important questions about the balance of power in AI development. From an academic standpoint, Mistral Large 3 will be a subject of study for years: a testbed for large-scale Mixture-of-Experts, a new benchmark for multimodal LLMs, and a blueprint for scalable AI infrastructure. All claims and observations in this report are supported by the cited sources, which include Mistral’s own publications ([1]) ([29]), independent technical blogs【14【16【47, benchmark analyses【14【32, and news reports【17【20【41. The emergence of Mistral Large 3 is a watershed in the AI era, and understanding its many facets is critical for anyone involved in the future of AI.

External Sources (101)

DISCLAIMER

The information contained in this document is provided for educational and informational purposes only. We make no representations or warranties of any kind, express or implied, about the completeness, accuracy, reliability, suitability, or availability of the information contained herein. Any reliance you place on such information is strictly at your own risk. In no event will IntuitionLabs.ai or its representatives be liable for any loss or damage including without limitation, indirect or consequential loss or damage, or any loss or damage whatsoever arising from the use of information presented in this document. This document may contain content generated with the assistance of artificial intelligence technologies. AI-generated content may contain errors, omissions, or inaccuracies. Readers are advised to independently verify any critical information before acting upon it. All product names, logos, brands, trademarks, and registered trademarks mentioned in this document are the property of their respective owners. All company, product, and service names used in this document are for identification purposes only. Use of these names, logos, trademarks, and brands does not imply endorsement by the respective trademark holders. IntuitionLabs.ai is an AI software development company specializing in helping life-science companies implement and leverage artificial intelligence solutions. Founded in 2023 by Adrien Laurent and based in San Jose, California. This document does not constitute professional or legal advice. For specific guidance related to your business needs, please consult with appropriate qualified professionals.

Related Articles

IBM Granite 4.0: A Hybrid LLM for Healthcare AI

An overview of IBM's Granite 4.0 LLM, detailing its hybrid Mamba/Transformer design, efficiency benefits, and applications for healthcare AI and data privacy.

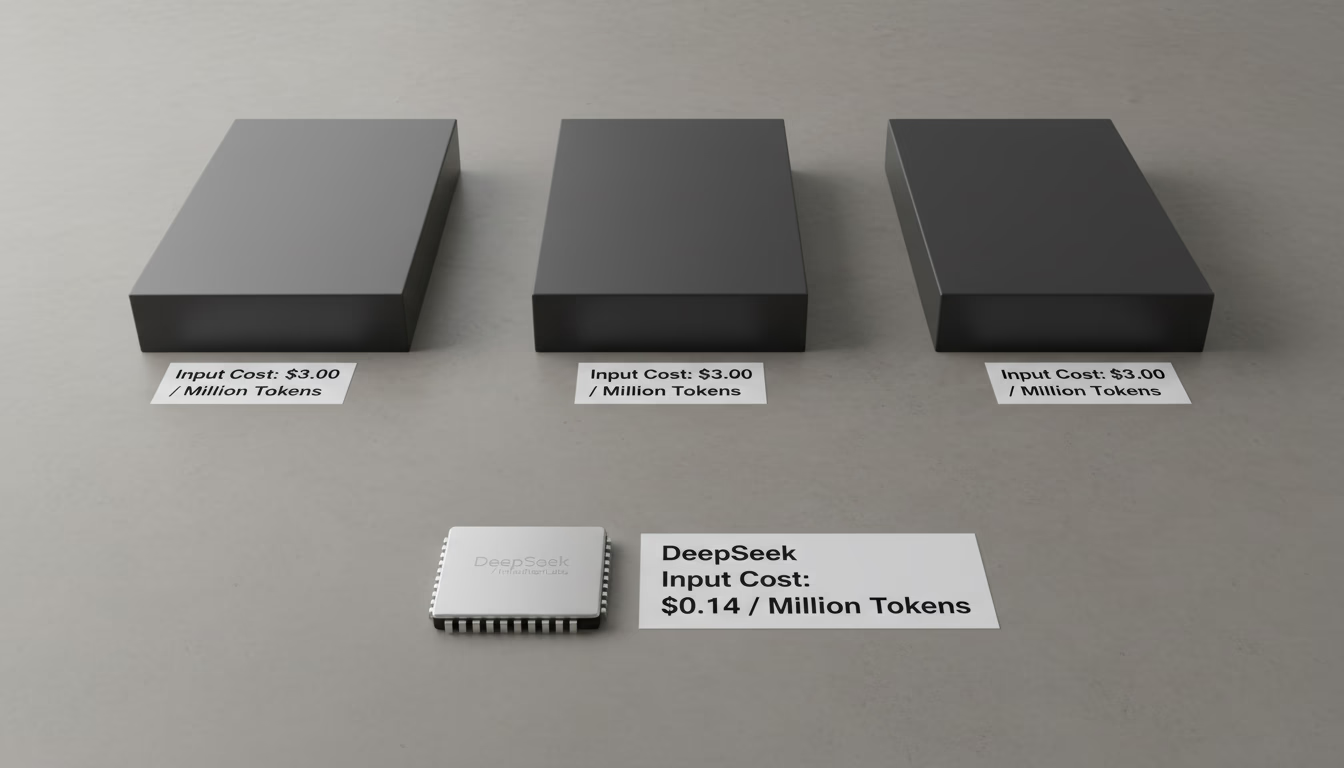

DeepSeek's Low Inference Cost Explained: MoE & Strategy

Learn why DeepSeek's AI inference is up to 50x cheaper than competitors. This analysis covers its Mixture-of-Experts (MoE) architecture and pricing strategy.

GLM-4.6: An Open-Source AI for Coding vs. Sonnet & GPT-5

An analysis of GLM-4.6, the leading open-source coding model. Compare its benchmarks against Anthropic's Sonnet and OpenAI's GPT-5, and learn its hardware needs