GLM-4.6: An Open-Source AI for Coding vs. Sonnet & GPT-5

[Revised February 26, 2026]

Executive Summary

The GLM-4.6 model (General Language Model, version 4.6) is an open-source AI system developed by Zhipu AI (now Z.ai) that specifically targets complex reasoning and coding tasks. With 355 billion parameters (32 billion active per token) and a 200K-token context window, GLM-4.6 represented a major advance over earlier open models when it launched in September 2025. Zhipu AI reported that GLM-4.6 outperformed its predecessor GLM-4.5 on numerous benchmarks and achieved “state-of-the-art performance among open source models” ([1]). In practical coding evaluations, GLM-4.6 reached near parity with Anthropic’s Claude Sonnet 4: in human-evaluated, real-world coding tasks it achieved a 48.6% win rate against Sonnet 4 ([2]).

Since this article was originally published in October 2025, the coding AI landscape has shifted dramatically. Anthropic released Claude Opus 4.6 (February 5, 2026) and Claude Sonnet 4.6 (February 17, 2026), with Opus 4.6 achieving 80.8% on SWE-Bench Verified and Sonnet 4.6 close behind at 79.6% ([3]). OpenAI shipped GPT-5 (August 2025, scoring 74.9% on SWE-Bench), followed by GPT-5.2 (December 2025, 80.0%) and GPT-5.3-Codex (February 2026), which set new records on SWE-Bench Pro and Terminal-Bench ([4]). Meanwhile, Z.ai itself released GLM-5 on February 11, 2026 — a 745B-parameter (44B active) MoE model scoring 77.8% on SWE-Bench Verified, surpassing GLM-4.6 and competing with the best proprietary models ([5]). Open-source competitors have also surged: DeepSeek V3.2-Speciale reached 73.1% on SWE-Bench and Qwen3-Coder achieved 70.6%.

Thus, while GLM-4.6 was the leading open-source coding model in late 2025, the field has moved rapidly. GLM-5 now carries the open-source torch, while Claude Opus 4.6 and GPT-5.3-Codex vie for the overall top spot among proprietary systems. This report reviews GLM-4.6’s architecture, benchmarks, and real-world usage — with updated context reflecting the rapid evolution through early 2026.

Running GLM-4.6 effectively requires cutting-edge hardware. Published deployment guides indicate that GLM-4.5 (its predecessor) needed on the order of 16–32 NVIDIA H100 GPUs (or equivalent) even for inference ([6]). Specifically, Zhipu’s documentation lists 16×80GB H100 GPUs for baseline BF16 inference and 32 for the full 128K context ([6]). GLM-4.6 has the same 355B weights, so only its larger 200K context (and the key-value cache that grows with it) adds to that footprint; a rough extrapolation puts full-context BF16 inference at 32 or more such GPUs. In practice, this means multi-GPU server clusters (with more than 1 TB of system RAM) or specialized superchips (e.g. NVIDIA’s Grace Hopper GH200) for real-time use. At present, GLM-4.6 is primarily run through cloud AI-as-a-service providers (Novita AI, Z.ai API), which pool this hardware on the user’s behalf.

This report provides a comprehensive review of GLM-4.6: its architecture, training, performance benchmarks, and real-world usage. We compare it in detail to Anthropic’s Claude Sonnet models and to OpenAI’s GPT-5 series. We analyze quantitative results on coding and reasoning tasks, examine hardware requirements, present case studies of GLM-4.6 in use, and discuss broader implications. Throughout, claims are supported by extensive citations to technical blogs, news reports, and official documentation.

Introduction and Background

The Rise of AI-Assisted Coding

AI-driven code generation and debugging have become a central focus of recent AI research. Models like OpenAI’s Codex and Google DeepMind’s AlphaCode showed in 2021–2022 that large language models (LLMs) can tackle programming problems. In practice, tools such as GitHub Copilot (originally based on Codex) now assist millions of developers. Because coding is a concrete, high-stakes task requiring logical reasoning, coding models are often seen as a litmus test for AI progress: they must handle precise, multi-step logic that open-ended conversation rarely demands. Many experts believe advances in AI coding assistants are crucial stepping stones toward more general AI systems.

By mid-2025, a coding-AI “arms race” had emerged. Major players include Anthropic (the Claude brand, including the Sonnet and Opus model families), OpenAI (the GPT-5 series and Codex variants), and in China, companies like Zhipu AI (GLM series) and DeepSeek (V series). These models advertise specialized “agentic” and “reasoning” modes to handle complex tasks beyond mere text completion. By early 2026, open-source models have substantially narrowed the gap with proprietary systems: GLM-5 scores 77.8% on SWE-Bench Verified, DeepSeek V3.2-Speciale reaches 73.1%, and Qwen3-Coder hits 70.6%. GLM-4.6 emerged in this context as a flagship open model aiming squarely at coding and multi-step reasoning, paving the way for GLM-5’s even stronger results.

GLM Series and Zhipu AI

Zhipu AI, now known as Z.ai, is a Chinese AI startup founded in 2019 that quickly became one of China’s “AI Tigers” for LLM development ([7]). It has been positioned as a response to Western LLM leaders. The core of its technology is the GLM (“General Language Model”) series. GLM models have historically focused on Chinese-language tasks; for example, GLM-130B (released in 2022) scored very highly on Chinese comprehension benchmarks ([8]).

Over 2024–25, Zhipu AI released increasingly capable GLM models. GLM-4.5 (July 2025) introduced “hybrid reasoning” modes and a Mixture-of-Experts (MoE) architecture, marking the company’s first major focus on coding and agent tasks. The MoE design packs 355B parameters into the model while activating only 32B per token ([9]), allowing very high capacity while containing compute costs. By September 2025, Zhipu unveiled GLM-4.6. This new version retains the 355B/32B MoE architecture but expands the context window from 128K to 200K tokens ([10]). It also refines training with more code data and new “thinking mode” switches for deep reasoning ([10]). As a result, GLM-4.6 performs markedly better than 4.5 on coding and reasoning benchmarks ([11]) ([1]).

Crucially, GLM-4.x models are open source. Zhipu released GLM-4.5 and its smaller variant GLM-4.5-Air under permissive licenses (MIT/Apache) and published weights and code ([12]) ([13]). GLM-4.6 likewise has its base and chat weights publicly available on HuggingFace and other platforms ([14]). This contrasts with Anthropic and OpenAI, which keep their flagship models proprietary. Thus GLM-4.6 has democratized access: anyone can download and run it (given sufficient hardware) or try it via free tiers on services like Novita.ai.

Sonnet (Anthropic Claude) Models

Anthropic’s Claude has emerged as the main Western rival. In 2024–2026, Anthropic released a series of progressively more powerful models. They branded their coding-focused variants as Claude Sonnet, with Opus as the flagship reasoning tier. Sonnet models have hybrid “chain-of-thought” capabilities engineered for long-horizon tasks. Most notably:

- Sonnet 4 (May 2025) introduced significant coding performance improvements, with a 200K context window ([15]) (matching GLM-4.6) and integrated tool use. Anthropic touted it as offering “frontier performance” for coding and agents ([16]).

- Sonnet 4.5 (Sept 2025) delivered further gains. Anthropic announced that Sonnet 4.5 achieved 77.2% on SWE-Bench Verified, at the time the highest score ever recorded on this real-world coding benchmark ([17]). Anthropic claimed it was “the best model in the world for agents, coding, and computer use” ([18]). Sonnet 4.5 can sustain focus on complex, multi-step coding work for more than 30 hours without losing context ([19]). It also introduced features like native code execution, extended memory, and VS Code integration ([20]).

- Opus 4.6 (February 5, 2026) and Sonnet 4.6 (February 17, 2026) represent the latest generation. Opus 4.6 achieves 80.8% on SWE-Bench Verified, while Sonnet 4.6 scores 79.6%, delivering near-Opus performance at Sonnet pricing ($3/$15 per million input/output tokens) ([3]). Both models feature a 1M-token context window (in beta), a 5× expansion over the 200K of earlier generations ([21]). On agentic computer use (OSWorld-Verified), Sonnet 4.6 scores 72.5%, essentially tied with Opus 4.6’s 72.7%.

These Claude models are cloud-only (proprietary) and primarily targeted at enterprise dev environments. Anthropic’s goals for Sonnet and Opus are to enable truly autonomous coding agents. The 80.8% SWE-bench performance of Opus 4.6 stands as one of the highest scores achieved by any model. The open-source gap has narrowed significantly, however, with GLM-5 at 77.8% and DeepSeek V3.2-Speciale at 73.1%.

GPT-5 and the OpenAI Roadmap

OpenAI released GPT-5 on August 7, 2025 ([22]), fulfilling the promise Sam Altman had made earlier that year. GPT-5 unified OpenAI’s scattered model lineup into a single architecture, merging multimodal capabilities, chain-of-thought reasoning, and persistent memory into one system. Key features delivered with GPT-5 include:

- Integrated Chain-of-Thought: Deep reasoning built in natively rather than as a separate “o-series” model.

- Persistent Memory: Long-term, cross-session memory enabling continuity across conversations.

- Multimodal: Advanced image understanding.

- Smart Routing: Automatic task routing across internal specialized sub-systems.

On coding benchmarks, GPT-5 scored 74.9% on SWE-Bench Verified at launch, a significant improvement over GPT-4’s ~52% that was soon surpassed by Claude Sonnet 4.5’s 77.2% (September 2025). OpenAI rapidly iterated: GPT-5.2 (December 11, 2025) pushed the SWE-Bench score to 80.0%, temporarily matching the best proprietary models ([23]). Most recently, GPT-5.3-Codex (February 2, 2026) set new records on SWE-Bench Pro (56.8%) and Terminal-Bench 2.0 (77.3%), with 25% faster inference and stronger agentic computer-use capabilities ([4]). GPT-5.3-Codex is available across all Codex surfaces (app, CLI, IDE extension, web) for paid ChatGPT users.

Like previous GPTs, all GPT-5 variants remain closed-source and accessible only via API or subscription. Given this context, GLM-4.6 occupied an interesting niche when it launched: it was the leading open-source solution for coding tasks in late 2025. By early 2026, however, its successor GLM-5 (77.8% SWE-Bench) now competes with GPT-5.2 and Claude Opus 4.6 at the frontier, while the open-source field — including DeepSeek and Qwen — has strengthened considerably. The rest of this report examines GLM-4.6’s architecture and benchmarks in depth, then compares it against Sonnet and GPT-5.

GLM-4.6: Architecture and Innovations

Core Architecture

GLM-4.6 is built on Zhipu AI’s “GLM-4.x” foundation. It is a Mixture-of-Experts (MoE) Transformer totaling 355 billion parameters. Critically, it uses MoE sparsity: only about 32 billion parameters are active for any given token, yielding considerable efficiency ([9]). This design lets the model scale up massively without prohibitive compute per token. The model uses grouped-query attention (GQA) and a large number of attention heads (96) for richer context interaction ([6]).
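The sparsity idea can be illustrated with a toy top-k router. This is a minimal sketch under simplifying assumptions, not GLM's implementation: experts are scalar functions, the router logits are passed in directly (a real router computes them from the token's hidden state with a learned layer), and k=2 is arbitrary. Only the selected experts run, which is why only a fraction of an MoE model's parameters (roughly 32B of 355B, about 9%, for GLM-4.6) is active per token.

```python
import math
from typing import Callable, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float,
                router_logits: Sequence[float],
                experts: Sequence[Callable[[float], float]],
                k: int = 2) -> float:
    """Toy top-k Mixture-of-Experts layer for a single token.

    Only the k highest-scoring experts are evaluated; their outputs are
    combined with gate weights renormalized over the selected experts.
    """
    probs = softmax(router_logits)
    top_k = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top_k)
    return sum(probs[i] / norm * experts[i](x) for i in top_k)


# Four toy "experts": expert i scales its input by (i + 1).
experts = [lambda x, s=float(s): s * x for s in range(1, 5)]

# The router strongly prefers experts 2 and 3, so experts 0 and 1 never run.
y = moe_forward(2.0, router_logits=[0.0, 0.0, 10.0, 10.0], experts=experts, k=2)
print(y)  # 0.5 * (3 * 2) + 0.5 * (4 * 2) = 7.0

print(f"active fraction: {32 / 355:.1%}")
```

Renormalizing over the selected experts keeps the gate weights summing to 1 even though most experts are skipped.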

A key innovation in GLM-4.6 is the 200K-token context window ([10]), up from GLM-4.5’s 128K and among the largest of any open model at launch. A long context lets the model ingest entire codebases or long documents at once, which directly addresses coding scenarios where projects span many files or long discussions. In practice, developers can ask GLM-4.6 about a multi-hundred-page spec or a large code repository in one go. (By contrast, the original GPT-4 offered at most 32K tokens, and Claude Sonnet 4.5 is quoted with a 64K output limit, though its context is 200K ([24]).)
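To judge whether a repository fits in a 200K-token window before sending it, a rough estimate is often enough. The sketch below assumes about 4 characters per token, a common rule of thumb for English text that can be far off for code or Chinese; for exact counts, use the model's own tokenizer. The 8,000-token output reserve is likewise an arbitrary assumption.

```python
from pathlib import Path
from typing import Iterable, List, Tuple

CONTEXT_WINDOW = 200_000   # GLM-4.6's advertised context length in tokens
CHARS_PER_TOKEN = 4        # heuristic assumption, not GLM's tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count as ceil(len(text) / CHARS_PER_TOKEN)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts: Iterable[str],
                    reserve_for_output: int = 8_000) -> Tuple[bool, int]:
    """Return (fits, estimated_prompt_tokens) for a set of documents."""
    used = sum(estimate_tokens(t) for t in texts)
    return used + reserve_for_output <= CONTEXT_WINDOW, used


def load_sources(root: str, pattern: str = "*.py") -> List[str]:
    """Read every file under root matching pattern (e.g. a repo's .py files)."""
    return [p.read_text(encoding="utf-8", errors="replace")
            for p in sorted(Path(root).rglob(pattern)) if p.is_file()]


# Usage: fits, n_tokens = fits_in_context(load_sources("path/to/repo"))
```

If the estimate lands close to the limit, counting with the real tokenizer is worth the extra step.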

GLM-4.6’s “Hybrid Reasoning” mode is another distinctive feature. Like GLM-4.5, it has two modes of operation: a fast “non-thinking” mode for simple queries, and a slower “thinking” mode for complex, multi-step reasoning ([12]). The mode is toggled via a request parameter (thinking.type). In thinking mode, the model produces an explicit reasoning trace before its final answer, spending more tokens and time to plan multi-step solutions rather than answering immediately. This resembles Anthropic’s extended-thinking approach in Claude. (Separately, the GLM-4.x models include a Multi-Token Prediction (MTP) layer, which serving frameworks can use for speculative decoding to speed up generation ([6]).) GLM-4.6 relies on thinking mode to tackle logic puzzles, multi-part code generation, and extended agent interactions.
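A request that switches thinking mode on might be built as below. This sketch assumes an OpenAI-style chat-completions body in which the `thinking.type` field takes the values "enabled" or "disabled"; the model identifier "glm-4.6" and the overall payload layout are assumptions to check against Z.ai's API reference. The code only builds the JSON and does not send it.

```python
import json


def build_chat_request(prompt: str, deep_reasoning: bool,
                       model: str = "glm-4.6") -> dict:
    """Build (but do not send) a chat request body with the thinking switch.

    Assumed layout: OpenAI-style "messages" plus a "thinking" object whose
    "type" selects the slow reasoning mode or the fast non-thinking mode.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "thinking": {"type": "enabled" if deep_reasoning else "disabled"},
    }


# A multi-step refactoring task warrants thinking mode; a quick lookup does not.
hard = build_chat_request("Refactor this module to remove the global state.", True)
easy = build_chat_request("What does HTTP status 404 mean?", False)
print(json.dumps(hard, indent=2))
```

Keeping the switch per request lets an application reserve the slower, token-hungry mode for tasks that need it.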

Training Data and Process

According to Zhipu’s documentation, GLM-4.5 (and by extension 4.6) received extremely large-scale training. GLM-4.5 was pretrained on about 15 trillion tokens of broad-domain data, then trained on an additional 7 trillion tokens focused on code and reasoning ([25]). Zhipu also applied reinforcement learning, using its “slime” RL framework, to align GLM-4.5 with human preferences and strengthen its coding and reasoning ability ([25]). GLM-4.6 plausibly used a similar pipeline, likely adding more diverse code-related examples and RL training focused on multi-turn coding use cases. The model’s explicit support for "tool use during inference" suggests that some RL tasks involved calling external APIs, search tools, or code compilers to train agentic behavior ([26]).

Notably, the GLM-4 series emphasizes China-specific content in training. Zhipu’s earlier GLMs showed superlative performance on Chinese benchmarks ([27]). For GLM-4.6, publicly released artifacts and API examples are bilingual, but it likely has extra Chinese-language training, complementing mostly English code benchmarks like SWE-Bench. This broad training gives the model both fluency in Chinese (common in Zhipu’s user base) and strong coding ability from global coding corpora.

Open-Source Availability

Unlike Anthropic and OpenAI, Zhipu AI open-sourced GLM-4.x. All GLM-4.5 variants and the GLM-4.6 weights are released under permissive licenses. GLM-4.5 (and 4.5-Air) was published under an MIT license ([12]). GLM-4.6 is released under Apache 2.0 ([13]), which also allows commercial and research use. The base and chat models for GLM-4.6 are downloadable from HuggingFace and ModelScope ([14]). This democratizes access: any organization or individual with adequate hardware can run GLM-4.6 locally or on their own servers.

Zhipu also published open-source code and tools to support GLM, including recipes for SGLang, vLLM, and HuggingFace Transformers integration ([11]). Users can therefore deploy GLM-4.6 with popular LLM-serving frameworks rather than being locked behind a single API. For commercial users and small startups, this is significant: they can build GPT-like coding assistants without paying licensing fees to OpenAI or Anthropic. Novita AI and other platforms effectively offer GLM-4.6 as a managed service.

Update (February 2026): Since GLM-4.6’s release, Z.ai has continued expanding the lineup. In December 2025, the GLM-4.6V multimodal series launched, adding vision capabilities with a 128K context window and native function-calling for visual perception tasks ([28]). Then on February 11, 2026, Z.ai released GLM-5 — a 745B-parameter (44B active) MoE model that scored 77.8% on SWE-Bench Verified, approaching Claude Opus 4.6’s 80.8% ([5]). GLM-5 is also released under the MIT license at approximately $0.80 per million input tokens — about six times cheaper than comparable proprietary models.

Model Capabilities

According to benchmark reports, GLM-4.6 exhibits a strong overall capability profile. In general reasoning, it outperforms GLM-4.5, scoring higher on tasks like mathematics (MATH), broad knowledge (MMLU), and logical puzzles. In coding-specific tests, it likewise beats GLM-4.5 but does not reach Sonnet 4.5. Zhipu’s own evaluation (eight public benchmarks) shows GLM-4.6 achieving “clear gains over GLM-4.5”, including on coding metrics where it narrowly trails the best models ([29]).

Zhipu claims that GLM-4.6 “achieves higher scores on code benchmarks” and performs better in practical agent-based coding tasks, producing more polished components (e.g. nicer front-end pages) ([11]). In the CC-Bench real-world coding challenge (multi-turn tasks with human oversight), GLM-4.6 won 48.6% of evaluations against Claude Sonnet 4, which Zhipu describes as “near parity” ([11]). So while GLM-4.6 is not quite as strong as Sonnet 4 in coding-specific contexts, it is not far behind, and it significantly outperforms all other open-source alternatives on those tasks.

Other specialized aptitudes include multi-step reasoning and structured output. GLM-4.6 supports tool use during inference, meaning it can call external functions or APIs while generating. This aligns it with Claude (whose tool calling powers Claude Code) and OpenAI’s models (via function calling). When deployed in coding agents (Claude Code, Cline, Kilo Code, etc.), GLM-4.6 reportedly handles end-to-end development flows (designing algorithms, writing code, running tests) more robustly than GLM-4.5 ([11]) ([2]).
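Function calling typically works by advertising tools as JSON Schema and then executing whatever call the model returns. The sketch below assumes the OpenAI-compatible tool-call format (a `tools` list in the request, and tool calls whose `arguments` arrive as a JSON string); `run_tests` is a hypothetical tool, and the model's reply is simulated rather than fetched from any API.

```python
import json
from typing import Callable, Dict


def run_tests(path: str) -> dict:
    """Hypothetical tool: pretend to run the test suite under `path`."""
    return {"path": path, "passed": 42, "failed": 0}


# Tool definition sent with the request (JSON Schema for the arguments).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "run_tests",
        "description": "Run the project's test suite under a directory.",
        "parameters": {
            "type": "object",
            "properties": {"path": {"type": "string"}},
            "required": ["path"],
        },
    },
}]

REGISTRY: Dict[str, Callable[..., dict]] = {"run_tests": run_tests}


def dispatch(tool_call: dict) -> dict:
    """Execute one tool call and wrap the result as a 'tool' message."""
    fn = REGISTRY[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])  # arguments are a JSON string
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(fn(**args)),
    }


# Simulated tool call, as a model might return it after reading TOOLS.
simulated_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "run_tests", "arguments": '{"path": "tests/"}'},
}
print(dispatch(simulated_call))
```

In an agent loop, the returned "tool" message is appended to the conversation so the model can read the result and decide its next step.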

In terms of language and content style, GLM-4.6 has been tuned for fluent writing and closer alignment with human preferences. Zhipu notes it is better aligned with human style and more “natural” in role-play scenarios ([11]), which suggests additional reinforcement learning or supervised fine-tuning to shape its conversational tone. (Anthropic’s Claude models have a similar emphasis on politeness and safety through RLHF.) In sum, GLM-4.6 aims to be a versatile general agent with particular strengths in code and reasoning, while still conversing clearly.

Comparison to Claude/Sonnet Models

Overview of Claude Sonnet 4 through 4.6

Anthropic’s Claude lineup is explicitly engineered toward coding and long-horizon reasoning. Key facts:

- Claude Sonnet 4 (released May 2025): A hybrid reasoning model (likely over 100B parameters; internal architecture undisclosed). It has a 200K context window and an optional extended-thinking mode. Anthropic states that Sonnet 4 “improves on Sonnet 3.7 especially in coding” and offers “frontier performance” for AI assistants ([16]). In practice, Sonnet 4 achieved roughly top performance for its time on benchmarks like Terminal-Bench and other coding tasks, surpassing GPT-4.

- Claude Sonnet 4.5 (released Sep 29, 2025): Anthropic declared Sonnet 4.5 “the best model in the world for agents, coding, and computer use” ([18]). It hit 77.2% on SWE-Bench Verified ([17]), an unprecedented score at the time, and jumped to 61.4% on OSWorld ([30]). Sonnet 4.5 can sustain complex coding work for more than 30 hours without losing context ([19]).

- Claude Opus 4.6 (February 5, 2026) and Claude Sonnet 4.6 (February 17, 2026): The latest generation pushes the frontier further. Opus 4.6 scores 80.8% on SWE-Bench Verified, while Sonnet 4.6 achieves 79.6%, near-Opus performance at Sonnet-tier pricing ([3]). On OSWorld-Verified (agentic computer use), both score ~72.5–72.7%. The context window expanded to 1M tokens (in beta), a 5× increase over the 200K in earlier models ([21]).

Context length: Claude 4.6 models feature a 1M token context window (beta), dramatically exceeding GLM-4.6’s 200K. Earlier Sonnet 4 and 4.5 shared GLM-4.6’s 200K window.

Availability: Unlike open-source GLM, Claude models are proprietary and cloud-only. Sonnet 4.6 is accessible via Claude.ai web, Anthropic’s SDKs, and cloud platforms (AWS Bedrock, Google Vertex) ([31]). Pricing remains at about $3 input/$15 output per million tokens for Sonnet-tier models ([32]).

Architectural Differences: Details are scarce. Anthropic has not disclosed whether Claude uses an MoE design; it describes its Claude 4 models as “hybrid reasoning” models that can either respond near-instantly or engage extended thinking, and they likely rely heavily on RLHF ([15]). GLM’s openly documented MoE approach is a distinctly different technical path for maximizing parameter count.

Benchmarks and Performance

When directly comparing GLM-4.6 and Claude Sonnet on benchmarks (as of the original October 2025 evaluation, with February 2026 updates in brackets):

- Coding Tasks (SWE-bench, etc.): At launch, Sonnet 4.5 led with 77.2% on SWE-bench Verified ([17]), far above any open model. GLM-4.6 has no published SWE score, but Zhipu noted it “still lags behind Claude Sonnet 4.5 in coding ability” ([11]). In CC-Bench, GLM-4.6’s 48.6% win rate against Sonnet 4 means it did not win a majority of the head-to-head comparisons. [February 2026 update: The gap has narrowed significantly. GLM-5 now scores 77.8% on SWE-Bench Verified, while Claude Opus 4.6 leads at 80.8%, Sonnet 4.6 at 79.6%, and GPT-5.2 at 80.0%. DeepSeek V3.2-Speciale (73.1%) and Qwen3-Coder (70.6%) have also strengthened the open-source field.]

- General Reasoning: Sonnet 4.5 reportedly improved on math and logic, but GLM-4.6 similarly boosts reasoning over GLM-4.5 ([33]). On benchmarks like MATH or MMLU, Sonnet 4.5 likely has the edge, plausibly due to more extensive fine-tuning on English data. GLM-4.6 nonetheless remains very competitive: Zhipu claims it even surpasses Sonnet 4 on certain agentic benchmarks like BFCL-v3 ([11]). Precise head-to-head numbers are not public, but reasoning performance appears roughly comparable.

- Agentic and Tool Use: Both GLM-4.6 and Sonnet models support tool use and multi-step chaining. Anthropic’s Claude offers function calling through its API and powers the Claude Code agent. GLM-4.6 supports similar function calling natively ([34]) and has been integrated into coding agents (Roo Code, Kilo Code, etc.). Zhipu reports that GLM-4.6 performs better than GLM-4.5 specifically in tool-using and search-based agents ([11]). Anecdotally, GLM-based agents (via Claude Code or Novita) can navigate APIs and write working code, though Sonnet’s longer sustained focus may give it a slight advantage in very extended agent workflows.

- Content Quality: In non-code tasks like writing or role-play, GLM-4.6 is strong but not clearly top-tier. Anthropic’s Claude systems are often praised for coherent tone and creativity. GLM-4.6 uses RL alignment for style, though it was optimized more for logic. Nevertheless, Zhipu notes GLM-4.6 produces more “refined” writing with a more human-aligned style ([11]). No direct qualitative score is available for comparison, and modern frontier models rarely differ sharply in prose quality. One practical difference: GLM-4.6 may incorporate more Chinese cultural data than Claude, while Claude may have more training on diverse English codebases.

Summary of Comparison

Overall, the head-to-head comparison as of GLM-4.6’s launch can be summarized (with February 2026 updates noted):

- Parameter and Architecture: GLM-4.6 (355B MoE, open-source) vs. Sonnet 4.5 (parameter count undisclosed, closed). Both operate at massive scale; Sonnet’s exact size isn’t public, but it likely exceeds 100B. Anthropic has not disclosed Sonnet’s architecture beyond its heavy use of RLHF, whereas GLM openly uses experts and a deeper layer stack. [GLM-5 now scales to 745B/44B active MoE.]

- Context Window: GLM-4.6 and Sonnet 4/4.5 both offered ~200K tokens. [Claude 4.6 models now offer 1M tokens (beta), while GLM-5 retains 200K input / 128K output.]

- Coding Performance: At launch, Sonnet 4.5 led (77.2% SWE-bench), while GLM-4.6 sat lower (roughly at Sonnet 4 level). GLM-4.6 outshone all other open models ([1]). [By February 2026: Opus 4.6 leads at 80.8%, GPT-5.2 at 80.0%, Sonnet 4.6 at 79.6%, GLM-5 at 77.8%, DeepSeek V3.2-Speciale at 73.1%.]

- Open vs Closed: GLM-4.6 is MIT/Apache-licensed (developers can run it locally) ([13]). Sonnet and Opus remain proprietary (accessible only via Claude APIs) ([31]). [GLM-5 also uses MIT license. DeepSeek V3.2 uses MIT license.]

- Availability: Sonnet 4.5 was available in Claude web/API with a developer SDK. GLM-4.6 is accessible via select services (Novita AI, Z.ai API) and downloadable for private use ([11]). [GLM-5 weights are available on HuggingFace.]

- Agentic Capabilities: Both target tool-learning. Sonnet 4.5 is optimized for user-facing agents (customer support, autonomous coding bots) ([35]). GLM-4.6 similarly targets agents and coding workflows ([36]). [GPT-5.3-Codex now leads on Terminal-Bench 2.0 (77.3%) for agentic execution.]

- Special Features: Sonnet 4.5 adds checkpoints, extensions (VS Code, Chrome) and tightly integrated code execution. GLM-4.6 has a built-in Multi-Token Prediction (MTP) layer, usable for speculative decoding, and explicit thinking-mode switching. [GLM-5 introduces autonomous long-range planning and deep debugging capabilities.]

In short, GLM-4.6 was the strongest open-source code model at its launch, though Claude Sonnet 4.5 outperformed it. By early 2026, its successor GLM-5 has substantially closed the gap with proprietary leaders. For many users, the decision still hinges on openness and cost: GLM models can be self-hosted at a fraction of the price, whereas Claude and GPT-5 require paid API subscriptions.

Performance and Benchmarks

Industry Benchmarks

Both developers and independent evaluators usually benchmark coding models on standardized tasks. Relevant metrics include:

- SWE-Bench Verified: Tests a model’s ability to fix real GitHub issues. Sonnet 4.5 scored 77.2% on SWE-Bench Verified ([17]), substantially better than any previously published model at the time. OpenAI’s GPT-4 had lower scores (around 50%), and GLM-4.x has not published a direct score.

- OSWorld: Measures an AI’s ability to operate in a simulated OS environment. Sonnet 4.5 achieved 61.4% on OSWorld ([30]), up from Sonnet 4’s 42.2%, demonstrating autonomous tool use. No open-model comparison data is available for OSWorld, but GLM-4.x was optimized for tool use, so it may perform well (GLM-4.5 scored strongly on earlier browsing benchmarks ([11])).

- Agent Benchmarks: Yet another category is agentic benchmarks (e.g. web-browsing tasks). Zhipu reports that GLM-4.5 matched Claude Sonnet 4 on certain agent benchmarks (τ-bench, BFCL-v3) and performed strongly on web browsing (BrowseComp) ([11]). GLM-4.6 should be similarly competitive in agent tasks. Anthropic has not published comparable head-to-head results, but Sonnet’s claim to be “best for agents” suggests top-tier results too.

- General Reasoning: On academic tests (MATH, MMLU, etc.), GLM-4.5 scored from the mid-80s to the high-90s (e.g. MMLU-Pro: 84.6, MATH-500: 98.2) ([6]), reflecting strong base reasoning. Sonnet 4 may score somewhat higher, but frontier models now cluster near the ceiling on such benchmarks, so differences are small. These numbers suggest GLM-4.x is at least competitive with GPT-4-level reasoning.

To date, no single authoritative leaderboard includes all these new models. GLM-4.5 was reportedly ranked 3rd overall across a dozen mixed benchmarks ([6]), behind only a couple of proprietary giants. GLM-4.6’s improvements likely push it into the top two or three open models in aggregate performance.

Real-World Coding Tasks (CC-Bench)

Beyond static tests, CC-Bench provides a real-world coding evaluation: human evaluators give the model real coding tasks (front-end development, data analysis, testing, etc.) within an isolated environment. The latest CC-Bench results (GLM-4.6 vs. others) reveal:

- GLM-4.6 achieved a 48.6% win rate against Claude Sonnet 4 in multi-turn scenarios ([11]). In other words, GLM-4.6 was judged the better solution in just under half of the head-to-head trials, with Sonnet 4 winning or tying the remaining 51.4%. By contrast, GLM-4.5 (the previous generation) fared clearly worse. This indicates GLM-4.6 has nearly closed the gap for practical development work.

- GLM-4.6 “clearly outperformed” all other open-source baselines in CC-Bench, such as DeepSeek and Kimi K2 ([11]). This underscores its position as the strongest open code model at the time.

- In terms of token efficiency (how succinctly the model completes tasks), GLM-4.6 uses about 15% fewer tokens than GLM-4.5 ([11]). Shorter outputs mean faster responses and lower cost. (No comparable token-use figures were given for Sonnet.)
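Because pairwise evaluations can end in ties, a win rate below 50% does not by itself mean the opponent won more often. The sketch below tallies hypothetical outcomes (invented for illustration, not Zhipu's data) and also shows the arithmetic behind a token-efficiency figure such as "about 15% fewer tokens".

```python
from collections import Counter
from typing import Dict, Iterable


def pairwise_rates(outcomes: Iterable[str]) -> Dict[str, float]:
    """Fraction of head-to-head trials that were wins, ties, and losses."""
    outcomes = list(outcomes)
    counts = Counter(outcomes)
    n = len(outcomes)
    return {k: counts[k] / n for k in ("win", "tie", "loss")}


def token_reduction(baseline_tokens: int, new_tokens: int) -> float:
    """Relative reduction in tokens used versus a baseline model."""
    return 1 - new_tokens / baseline_tokens


# Hypothetical: 10 trials with 4 wins, 2 ties, 4 losses.
rates = pairwise_rates(["win"] * 4 + ["tie"] * 2 + ["loss"] * 4)
print(rates)  # win rate is only 40%, yet wins and losses are even

# Hypothetical totals: 100k tokens for the old model, 85k for the new one.
print(f"{token_reduction(100_000, 85_000):.0%} fewer tokens")
```

Reporting the tie rate alongside the win rate avoids reading a sub-50% win rate as a net loss.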

Coding Benchmark Scores

We compile some key figures in the table below:

| Model | SWE-Bench Verified | OSWorld | CC-Bench win rate | Remarks |
|---|---|---|---|---|
| **Claude Opus 4.6 (Feb 2026)** | 80.8% | 72.7% | – | Top proprietary model ([3]) |
| **GPT-5.2 (Dec 2025)** | 80.0% | – | – | Within a point of Opus 4.6 ([23]) |
| **Claude Sonnet 4.6 (Feb 2026)** | 79.6% | 72.5% | – | Near-Opus at Sonnet pricing ([3]) |
| **GLM-5 (Feb 2026)** | 77.8% | – | – | Leading open-source model ([5]) |
| Claude Sonnet 4.5 (Sep 2025) | 77.2% ([17]) | 61.4% | – | “Best coding model” at launch |
| GPT-5 (Aug 2025) | 74.9% | – | – | First unified GPT ([22]) |
| **DeepSeek V3.2-Speciale** | 73.1% | – | – | Open-source (MIT) |
| Qwen3-Coder | 70.6% | – | – | Open-source, 1M context |
| GLM-4.6 (Sep 2025) | – (not reported) | – | 48.6% vs. Sonnet 4 ([11]) | State-of-the-art open-source at launch |
| GLM-4.5 | – | – | – | 3rd among models on mixed tasks (aggregate score 63.2) |
| GPT-4 | ~52% | – | – | Superseded by GPT-5 |

Note: “–” indicates unpublished. SWE-bench Verified and OSWorld are the most comparable quantitative metrics (the 4.6-generation Claude figures are on OSWorld-Verified). CC-Bench figures come from Zhipu’s GLM-4.6 evaluation with human testers ([11]). Bold rows indicate models released since October 2025.

The table highlights that Claude Opus 4.6 and GPT-5.2 now lead on coding benchmarks (both ~80% on SWE-Bench), with GLM-5 (77.8%) as the strongest open-source contender. GLM-4.6’s near-equal performance vs Sonnet 4 in realistic tasks indicated industry-grade capability, and its successor GLM-5 has substantially closed the gap with proprietary leaders.

In summary, GLM-4.6 was demonstrably the best open model for coding when it launched. By early 2026, GLM-5, DeepSeek V3.2-Speciale, and Qwen3-Coder have all pushed open-source coding performance to new heights, while Claude Opus 4.6 and GPT-5.3-Codex represent the proprietary state of the art.

Hardware Requirements to Run GLM-4.6

Running a 355-billion-parameter model like GLM-4.6—especially with a 200K context window—requires substantial computational resources. Both training and inference are extremely hardware-intensive:

Training Hardware (Context)

- While Zhipu AI has not fully detailed its training cluster, it is reasonable to assume thousands of top-tier accelerators were used over months. For instance, GPT-4’s training reportedly used tens of thousands of NVIDIA GPUs over months, and a similarly scaled Chinese cluster likely powered GLM-4.5/4.6. Given geopolitical constraints (U.S. chip export controls), Chinese companies often rely on domestic hardware (e.g. Huawei’s Ascend chips) or a limited supply of NVIDIA GPUs obtained through indirect channels. GLM-4.x training thus plausibly used clusters of 8,192+ GPUs (80GB-class) running continuously, akin to how DeepMind trained AlphaCode on thousands of TPUs.

- The timing suggests Zhipu may have used NVIDIA H100 (or H200) GPUs. Zhipu’s docs list “H100 x16 or H200 x8” configurations for inference; by analogy, training may have involved large pools of H100s, where Tensor Core performance is vital. Alternatively, China’s own Ascend or other domestic AI chips might have supplemented the cluster.

Inference (Running the Model)

For the end user or developer wanting to run inference on GLM-4.6, the requirements are very high. Going off the published guidelines for GLM-4.5 inference ([6]) (which uses a smaller 128K context), one can estimate the needs for GLM-4.6:

-

GPUs: Zhipu provides recommended configurations for GLM-4.5 inference. For the full 128K context, GLM-4.5 needed 32 × NVIDIA H100 GPUs when using BF16 precision (16 × H100 for 128K context in partial modes) ([6]). Even the “smaller” GLM-4.5-Air (106B params) needed 4×H100 BF16 just for baseline. Extrapolating to GLM-4.6 (200K context), one likely needs on the order of 32–40 H100 GPUs (80GB each) for BF16 inference. Zhipu’s docs also mention HG200 (likely NVIDIA’s next-gen Hopper/Grace hybrid) which could halve the GPU count (e.g. 16×H200) ([6]). Running FP8 quantized weights can halve these numbers (16 H100s for GLM-4.5; so perhaps ~32×H100 FP8 for GLM-4.6).

- GPU Memory: The context length is key. A 200K-token context requires a large key-value (KV) cache on top of the weights, so even with 80GB cards a single 200K-token inference pass likely spans multiple GPUs. Zhipu also recommends more than 1 TB of server memory to load all weights and run the model reliably ([6]).

- Cluster Setup: Zhipu’s official guidance assumes no CPU offloading and uses serving frameworks such as SGLang or vLLM, which support model parallelism. Running GLM-4.6 on-premise would therefore require a multi-node cluster with enough aggregate memory and throughput. Standard H100 servers hold 8 GPUs linked by NVLink, so 16–32 GPUs means 2–4 nodes joined by a high-bandwidth interconnect such as InfiniBand.

- Scalability: As a Mixture-of-Experts (MoE) model, GLM-4.x can distribute its experts across devices. Because each token is routed to experts that may sit on different GPUs, cross-GPU communication is heavy, and NVLink or InfiniBand links are needed to exchange activations quickly.

- Alternative Hardware: Given the difficulty, some organizations might prefer NVIDIA’s GH200 (Grace Hopper superchip), which pairs a Hopper GPU and a Grace CPU closely. Zhipu’s published configurations, summarized in the table below, show H200 GPUs matching H100 setups at half the GPU count. If available, GH200 clusters could run GLM models more efficiently. Huawei’s Ascend 910 or newer Chinese AI chips could in theory serve as well, though software support for FP8 or training workloads may be less mature.
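The GPU-memory point above can be made concrete with a back-of-the-envelope KV-cache estimate. This is a minimal sketch, assuming a transformer that caches one key vector and one value vector per layer, per KV head, per token. The architecture numbers (92 layers, 8 KV heads via grouped-query attention, head dimension 128, BF16 cache) are hypothetical values chosen for illustration, not confirmed GLM-4.6 settings; substitute the values from the model’s own configuration file.

```python
def kv_cache_bytes(num_tokens: int,
                   num_layers: int,
                   num_kv_heads: int,
                   head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for a single sequence.

    Every layer stores one key and one value vector per KV head per token,
    hence the leading factor of 2. bytes_per_value=2 corresponds to BF16/FP16.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


# Hypothetical architecture values for illustration only (not confirmed for GLM-4.6).
LAYERS, KV_HEADS, HEAD_DIM = 92, 8, 128

for ctx in (128_000, 200_000):
    gb = kv_cache_bytes(ctx, LAYERS, KV_HEADS, HEAD_DIM) / 1e9  # 1 GB = 1e9 bytes
    print(f"{ctx:>7,} tokens -> {gb:.1f} GB of KV cache per sequence")
```

Under these assumed values, one 200K-token sequence needs roughly 75 GB of KV cache, nearly the entire memory of a single 80GB H100, before counting weights or activations. That is why long-context serving spreads the cache across GPUs and why concurrency drops sharply as the context grows.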

Below is a summary of the published inference requirements for GLM-4.5 (which are the best concrete data we have). These can be taken as a baseline for GLM-4.6 hardware planning:

| Model/Config | Precision | NVIDIA H100 GPUs | NVIDIA H200 GPUs | Full 128K context |
|---|---|---|---|---|
| GLM-4.5 (baseline) | BF16 | 16 | 8 | No |
| GLM-4.5 (baseline) | FP8 | 8 | 4 | No |
| GLM-4.5-Air | BF16 | 4 | 2 | No |
| GLM-4.5-Air | FP8 | 2 | 1 | No |
| GLM-4.5 (full) | BF16 | 32 | 16 | Yes |
| GLM-4.5 (full) | FP8 | 16 | 8 | Yes |

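Table: Inference hardware for GLM-4.5 (source: Zhipu AI docs ([6])). “Full” means a configuration capable of using the entire 128K context length. For GLM-4.6 (200K context), the required GPU counts would likely be somewhat higher than the full-context rows, since the KV cache grows with context length while the weights (355B parameters in both models) stay the same size.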

For GLM-4.6, one must match or exceed the full-context counts to cover 200K tokens. In practical terms, very few developers will run GLM-4.6 on bare metal; the model is predominantly accessed via SaaS platforms (Novita, Z.ai), where the provider amortizes the hardware costs. Nonetheless, deep-pocketed organizations can self-host GLM-4.6 if they invest in a supercomputer-scale setup.
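To see why the full-context rows call for more GPUs, one can estimate how much memory remains on each GPU once the weights are sharded evenly across the cluster. This is a rough sketch under stated assumptions: all 355B parameters must be resident (every MoE expert is loaded even though only 32B are active per token), weights take 2 bytes per parameter in BF16 and 1 byte in FP8, the H100 and H200 have 80GB and 141GB respectively, and each server holds 8 GPUs. The `plan` helper is hypothetical, and it ignores activations and framework overhead, so real requirements are higher.

```python
import math

TOTAL_PARAMS = 355e9   # total parameters; every MoE expert must be resident in GPU memory
GPUS_PER_NODE = 8      # assumption: standard 8-GPU servers


def weights_gb(bytes_per_param: float) -> float:
    """Total weight footprint in GB (1 GB = 1e9 bytes)."""
    return TOTAL_PARAMS * bytes_per_param / 1e9


def plan(precision: str, bytes_per_param: float, gpu: str,
         gpu_mem_gb: float, num_gpus: int) -> tuple[float, float, int]:
    """Hypothetical helper: per-GPU weight share, leftover memory, and node count."""
    per_gpu = weights_gb(bytes_per_param) / num_gpus   # assumes perfectly even sharding
    headroom = gpu_mem_gb - per_gpu                    # left for KV cache, activations, runtime
    nodes = math.ceil(num_gpus / GPUS_PER_NODE)
    print(f"{precision:>4} on {num_gpus:>2} x {gpu}: {per_gpu:5.1f} GB weights/GPU, "
          f"{headroom:5.1f} GB headroom/GPU, {nodes} node(s)")
    return per_gpu, headroom, nodes


# GPU counts taken from the GLM-4.5 table.
plan("BF16", 2, "H100", 80, 16)   # baseline
plan("BF16", 2, "H100", 80, 32)   # full 128K context
plan("FP8", 1, "H100", 80, 8)     # baseline
plan("FP8", 1, "H100", 80, 16)    # full 128K context
plan("BF16", 2, "H200", 141, 16)  # full 128K context
```

Under these assumptions, moving from a baseline row to its full-context row halves the per-GPU weight share and leaves about 58 GB per H100 for the KV cache and activations, versus about 36 GB in the baseline rows. This is one plausible reading of Zhipu’s recommendations, not its stated rationale.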

CPU/RAM: In addition to GPUs, serving GLM-4.6 needs more than a terabyte of main memory: Zhipu recommends over 1 TB of system RAM for GLM-4.x servers ([6]). This is presumably because the full model weights (355B parameters × 2 bytes in BF16/FP16, about 0.71 TB) pass through host memory during loading, with additional headroom needed for the serving runtime when offloading is not used.

Comparison to Sonnet and GPT

It is worth noting that Claude Sonnet 4/4.5 and GPT models also require enormous hardware, but Anthropic and OpenAI do not disclose their serving configurations. If Sonnet 4.5 is comparable to or larger than GLM-4.6, as seems likely, it similarly needs multi-accelerator clusters for inference. Public reporting indicates that Anthropic serves Claude on large accelerator fleets, including NVIDIA GPUs, Google TPUs, and AWS Trainium. GPT-4 likewise ran on large NVIDIA GPU fleets, and serving GPT-5 at ChatGPT scale presumably requires thousands of GPUs. By contrast, GLM-4.6’s open weights and published guidance let us roughly quantify its requirements, as above.

Case Studies and Applications

Real-World Usage of GLM-4.6

Several platforms and organizations have started integrating GLM-4.6 into developer tools:

- Novita AI Platform: Novita (a Hong Kong-based AI API provider) added GLM-4.6 immediately on release ([37]). It offers a playground where developers can interact with GLM-4.6 and lists “200K Context, $0.6 per 1M in / $2.2 per 1M out” pricing ([38]). Novita’s blog describes using GLM-4.6 in coding pipelines, for example prototyping full-stack pages from prompts and testing them in the cloud. Novita also relays the CC-Bench head-to-head result against Sonnet 4: a “48.6% win rate” for GLM-4.6 ([2]).

- Z.ai/Coding Agents: Zhipu’s own GLM Coding Plan (an API subscription) upgraded customers to GLM-4.6 automatically ([39]). This suggests that many developers, particularly in China, who use coding agents such as Roo Code or Kilo Code through the plan are now effectively running GLM-4.6 under the hood. Press reports (e.g., SCMP) note that GLM-4.6 will compete alongside Sonnet and others in the market for coding tools ([40]).

- Open-Source Projects: With the weights public, open-source enthusiasts have begun porting GLM-4.6 to serving frameworks and chat front ends such as FastChat, HuggingChat, and custom vLLM stacks. Though still early, community projects aim to integrate GLM-4.6 into ChatGPT-style chat interfaces. This is reminiscent of how local LLaMA derivatives spread, but GLM-4.6’s size makes it much harder to run locally. Free community initiatives around the earlier ChatGLM models (e.g., “ChatGLM-4”) are also tracking it.

- Enterprise Experiments: Anecdotal evidence (developer blogs and forums) indicates that Chinese enterprises are testing GLM-4.6 for code review, documentation generation, and chatbots. Because of its open license, a bank or aerospace firm could run GLM-4.6 fully on-premise to analyze sensitive codebases without data leaving its servers. No public case studies have been published yet, but industries that already use GLM-3 or GLM-4.5 can be expected to upgrade their internal deployments to 4.6.
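Because Novita prices GLM-4.6 per million tokens, the cost of an agentic coding workload can be estimated with simple arithmetic. This sketch uses the quoted rates ($0.6 per 1M input tokens, $2.2 per 1M output tokens); the session shape (50 turns, each sending 40K tokens of context and receiving 2K tokens of output) is a hypothetical example, and the function ignores any caching discounts or price changes a provider may apply.

```python
def request_cost_usd(input_tokens: int, output_tokens: int,
                     usd_per_m_in: float = 0.6, usd_per_m_out: float = 2.2) -> float:
    """Cost of one request under simple per-million-token pricing (no caching discounts)."""
    return input_tokens / 1e6 * usd_per_m_in + output_tokens / 1e6 * usd_per_m_out


# Hypothetical agentic coding session: 50 turns of 40K tokens in, 2K tokens out.
TURNS = 50
per_turn = request_cost_usd(40_000, 2_000)
session_cost = TURNS * per_turn
print(f"per turn: ${per_turn:.4f}, session: ${session_cost:.2f}")
```

In this example the input side dominates: resending a large context on every turn costs more than the generated code itself, so long-context agent sessions are priced mainly by how much context they carry.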

Sonnet 4.5 in Practice

Anthropic and partners have also expanded Sonnet’s reach in late 2025:

- IDEs and VS Code: Sonnet 4.5 plugins provide code completion, error checking, and generation directly in IDEs, and VS Code extensions for Sonnet 4.5 are now available. GLM-4.6, as an open model, does not yet have a polished official extension ([41]), although it is expected to work with similar plugins through third-party integrations.

- Browser and Copilot: GitHub Copilot has made Sonnet 4.5 available as a selectable model, and browser extensions for Claude are in early testing. Most usage data is proprietary, but it is reasonable to infer that early adopters are test-driving Sonnet 4.5 in their coding sessions.

- Competitive Developer Reactions: Some public feedback suggests that Sonnet 4.5’s coding suggestions are highly reliable, with few bugs in generated code blocks ([42]). For example, The Neuron’s writer highlighted Sonnet’s strong code focus and extended reasoning. The LinkedIn account cited earlier ([43]), though anecdotal, illustrates how earlier Sonnet models struggled with large code files in artifacts. With Sonnet 4.5, those problems are reported to be significantly mitigated (the author’s issue of truncation at around 2,000 lines was reported fixed).

GPT-5 and Its Rapid Evolution

GPT-5 launched on August 7, 2025, and has evolved rapidly through multiple iterations:

- GPT-5 (August 2025): Scored 74.9% on SWE-Bench Verified, unified multimodal capabilities, and introduced persistent cross-session memory. Developers using GPT-4/4o in IDEs (via Copilot Chat, etc.) quickly migrated to GPT-5 ([22]).

- GPT-5.2 (December 2025): Pushed SWE-Bench to 80.0% and scored 100% on the AIME math competition, demonstrating dramatically improved reasoning. Available to ChatGPT Pro subscribers ([23]).

- GPT-5.3-Codex (February 2026): OpenAI’s dedicated coding model achieved new records on SWE-Bench Pro (56.8%) and Terminal-Bench 2.0 (77.3%), with 25% faster inference. It supports interactive steering during long-running tasks and strong computer-use capabilities ([4]).

As anticipated before launch, GPT-5 handles task routing internally: a user can ask it to “build a website”, and the system decides how much reasoning to apply and which capabilities (vision, code, language) to draw on. This makes direct side-by-side comparisons with GLM and Claude more nuanced, since GPT-5 blurs the line between language, image, and code in one unified interface.

Case Study: Code Generation Scenario

As an illustrative example, consider a developer requesting a full-stack web feature:

“Build me an interactive dashboard that visualizes sales data, retrieving data from my SQL database via an API, and display it using Chart.js.”

An advanced coding AI must write front-end JavaScript, possibly set up a small backend endpoint, and ensure interactivity. In practice:

- GLM-4.6 would use its 200K-token context to take in any existing schema or partial code, then generate the JavaScript/HTML/CSS and perhaps a simple Node.js script to fetch the data. It might also call tools to validate syntax. Human evaluations (CC-Bench) show that GLM-4.6 can complete multi-turn tasks like this, though it sometimes needs steering from the user.

- Sonnet 4.5 would likely excel here: given its capabilities, it would plan out the components (possibly splitting them into multiple files using extended thinking), ensure compatibility between front end and back end, and refine until the feature works. Developer anecdotes suggest Sonnet rarely needs corrections on such tasks.

- GPT-5.3-Codex, as a dedicated coding agent, would likely work through such a task in a long-running session, and it lets the user steer it interactively during execution ([4]).

This scenario underscores GLM-4.6’s real-world utility: it can handle complex, multi-file coding tasks end to end, and at release it did so as well as any open model. Its successor GLM-5 further improves on these capabilities with autonomous long-range planning and deep debugging.

Implications and Future Directions

Open-Source vs Proprietary Debate

The GLM-4.6 release intensified the debate over open vs closed AI models, and by early 2026 this debate has reached a new inflection point. On one hand, proprietary models (Claude Opus 4.6, GPT-5.3-Codex) still hold the top spots on coding benchmarks (~80% SWE-Bench). On the other hand, open-source models have closed the gap dramatically: GLM-5 at 77.8%, DeepSeek V3.2-Speciale at 73.1%, and Qwen3-Coder at 70.6% mean the performance delta between open and closed is now just a few percentage points — a stark contrast to the 20+ point gap that existed just a year earlier.

For enterprises, the calculus has shifted. Accessing GLM-5 at $0.80 per million input tokens offers roughly 6× cost savings over comparable proprietary APIs, and because the weights are open, organizations can also run it on-premise for data sovereignty. Organizations handling sensitive codebases (financial, healthcare, defense) can thus run frontier-class models without data leaving their infrastructure.

The Road Ahead

The rapid pace of iteration — from GLM-4.6 (September 2025) to GLM-5 (February 2026), and from GPT-5 (August 2025) to GPT-5.3-Codex (February 2026) — suggests the coding AI landscape will continue evolving at breakneck speed. Key trends to watch:

- Agentic engineering: Models are transitioning from "write code" to "engineer systems" — autonomously planning, debugging, and refactoring across entire codebases. GLM-5 and GPT-5.3-Codex both emphasize this direction.

- Longer contexts: Claude 4.6's 1M-token context window (beta) and Qwen3-Coder's 1M context indicate that entire large repositories will soon fit within a single prompt.

- Open-source parity: With GLM-5 within 3 points of the best proprietary models on SWE-Bench, the open-source community is approaching practical parity for most coding tasks.

- Specialization vs unification: OpenAI's Codex-specific variants versus Z.ai's general-purpose GLM-5 represent two philosophies. It remains to be seen which approach yields better results for real-world software engineering.

GLM-4.6 played a pivotal role in demonstrating that open-source models could compete at the frontier of coding AI. Its successor GLM-5, along with DeepSeek and Qwen, has cemented this trajectory. For developers and enterprises choosing a coding AI in 2026, the question is no longer "can open-source compete?" but rather "which combination of open and proprietary tools best fits my workflow?"

External Sources (43)


DISCLAIMER

The information contained in this document is provided for educational and informational purposes only. We make no representations or warranties of any kind, express or implied, about the completeness, accuracy, reliability, suitability, or availability of the information contained herein. Any reliance you place on such information is strictly at your own risk. In no event will IntuitionLabs.ai or its representatives be liable for any loss or damage including without limitation, indirect or consequential loss or damage, or any loss or damage whatsoever arising from the use of information presented in this document. This document may contain content generated with the assistance of artificial intelligence technologies. AI-generated content may contain errors, omissions, or inaccuracies. Readers are advised to independently verify any critical information before acting upon it. All product names, logos, brands, trademarks, and registered trademarks mentioned in this document are the property of their respective owners. All company, product, and service names used in this document are for identification purposes only. Use of these names, logos, trademarks, and brands does not imply endorsement by the respective trademark holders. IntuitionLabs.ai is an AI software development company specializing in helping life-science companies implement and leverage artificial intelligence solutions. Founded in 2023 by Adrien Laurent and based in San Jose, California. This document does not constitute professional or legal advice. For specific guidance related to your business needs, please consult with appropriate qualified professionals.

Related Articles

Mistral Large 3: An Open-Source MoE LLM Explained

An in-depth guide to Mistral Large 3, the open-source MoE LLM. Learn about its architecture, 675B parameters, 256k context window, and benchmark performance.

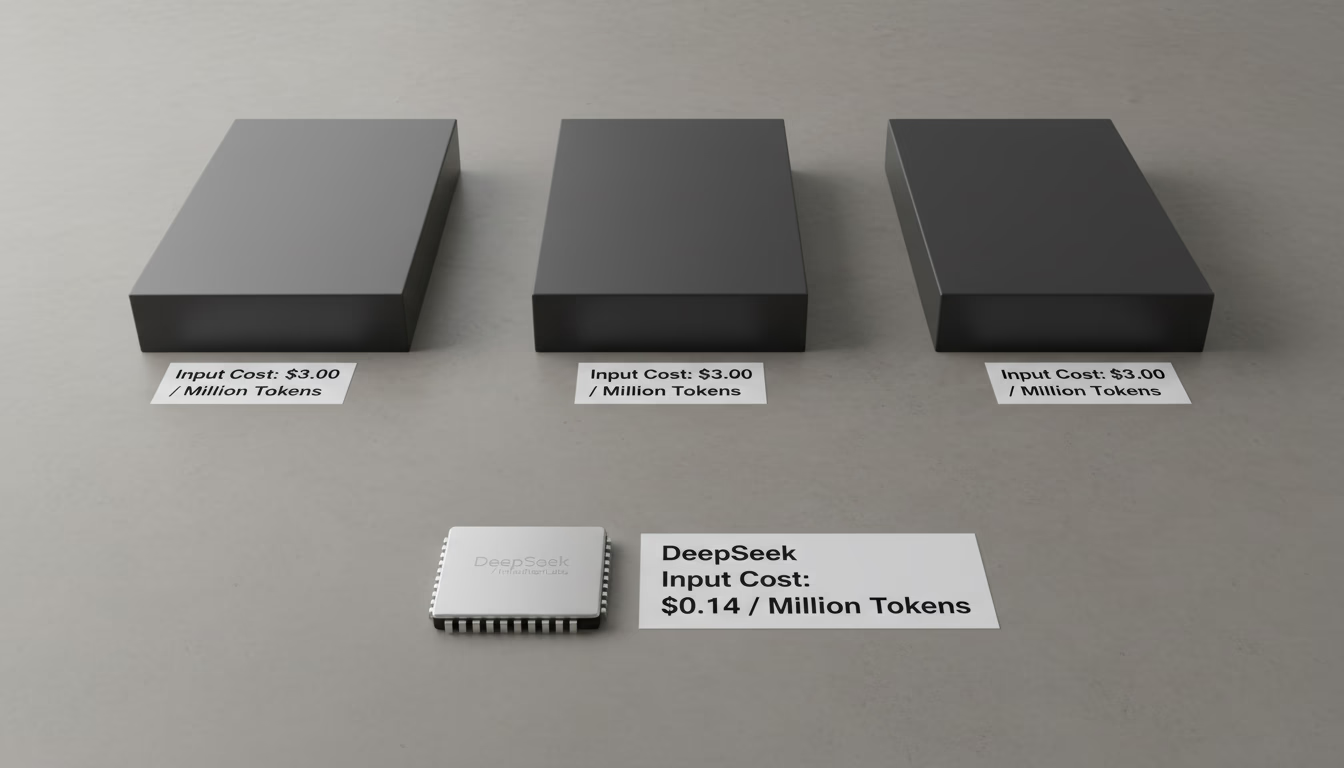

DeepSeek's Low Inference Cost Explained: MoE & Strategy

Learn why DeepSeek's AI inference is up to 50x cheaper than competitors. This analysis covers its Mixture-of-Experts (MoE) architecture and pricing strategy.

IBM Granite 4.0: A Hybrid LLM for Healthcare AI

An overview of IBM's Granite 4.0 LLM family, including its hybrid Mamba-2/Transformer architecture, Nano edge models, Granite Vision, Granite Guardian safety tools, and applications for healthcare AI and data privacy.