An analysis of the Agentic AI Foundation (AAIF) by the Linux Foundation. Understand its mission to create open standards for agentic AI and prevent vendor lock-in.

An in-depth guide to Mistral Large 3, the open-source MoE LLM. Learn about its architecture, 675B parameters, 256k context window, and benchmark performance.

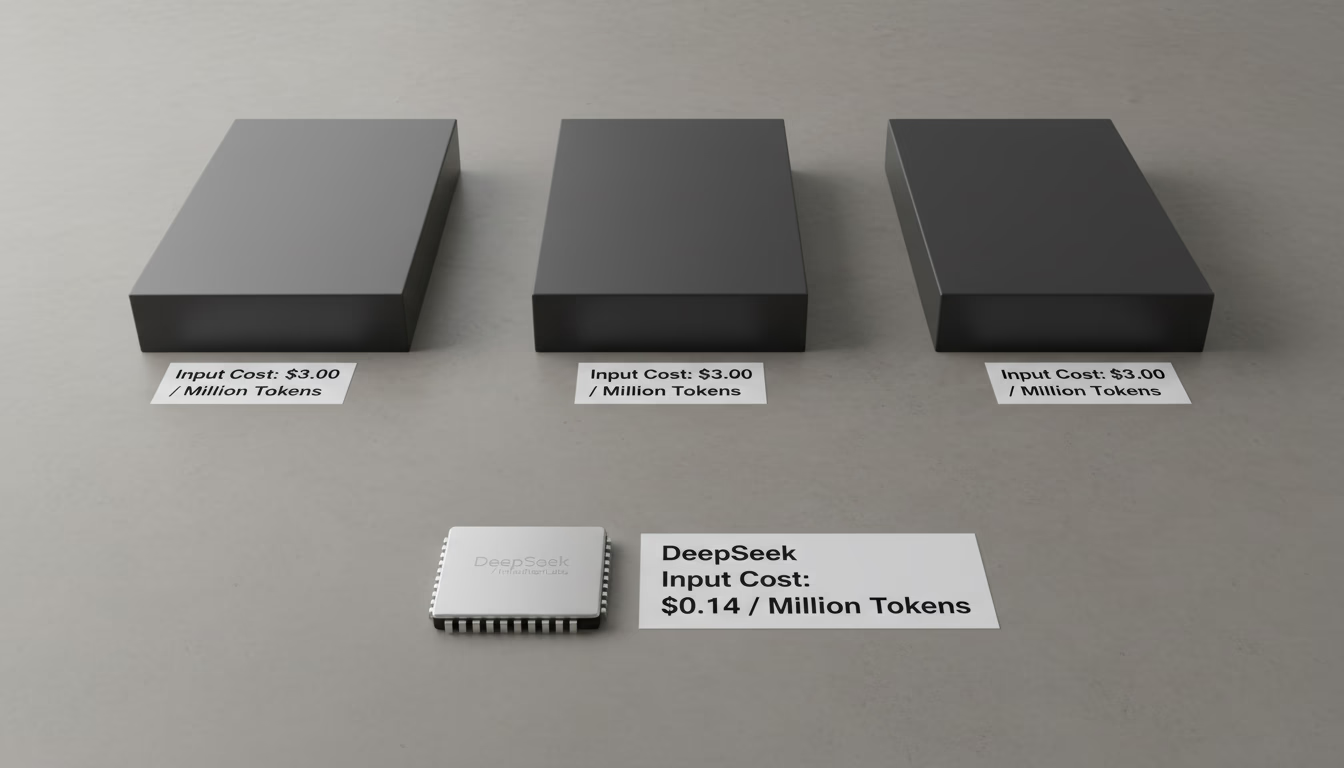

Learn why DeepSeek's AI inference is up to 50x cheaper than competitors. This analysis covers its Mixture-of-Experts (MoE) architecture and pricing strategy.

An analysis of GLM-4.6, the leading open-source coding model. Compare its benchmarks against Anthropic's Sonnet and OpenAI's GPT-5, and learn about its hardware requirements.

An overview of IBM's Granite 4.0 LLM family, including its hybrid Mamba-2/Transformer architecture, Nano edge models, Granite Vision, Granite Guardian safety tools, and applications for healthcare AI and data privacy.

© 2026 IntuitionLabs. All rights reserved.