The EU AI Act & Pharma: Compliance Guide + Flowchart

[Revised February 26, 2026] Updated to reflect the Digital Omnibus proposal (November 2025), withdrawal of the AI Liability Directive, the GPAI Code of Practice (July 2025), EFPIA's position on pharma R&D exemptions, the revised Product Liability Directive (effective December 2026), and the proposed delay of high-risk AI deadlines to 2027–2028.

Executive Summary

The EU Artificial Intelligence (AI) Act – the world’s first comprehensive AI law – is profoundly affecting pharmaceutical companies that develop, use, or procure AI systems. Enacted in August 2024, the Act adopts a risk-based approach, categorizing AI into unacceptable, high-risk, limited-risk, and minimal-risk applications ([1]) ([2]). Unacceptable uses (e.g. illegal social scoring) are banned, while high-risk uses (including many life-science applications) face stringent requirements for transparency, data governance, risk management, human oversight, and documentation ([3]) ([4]). Limited-risk applications (e.g. simple chatbots) must observe basic transparency or notice obligations, and minimal-risk uses face only voluntary guidelines ([3]) ([5]).

For pharmaceutical firms, this framework adds a new compliance layer atop existing regulations (such as GMP, medical-device rules, and data privacy laws) ([4]) ([6]). In practice, virtually all AI systems used directly to support patient safety or treatment decisions (e.g. diagnostic algorithms, clinical decision support, patient monitoring apps) will be deemed high-risk ([7]) ([8]). These systems must undergo formal conformity assessment, stringent risk management, and ongoing post-market monitoring. AI tools used in research-only contexts (e.g. early drug discovery models) are generally exempt from AI Act obligations ([9]) ([10]). Notably, the European Federation of Pharmaceutical Industries and Associations (EFPIA) has observed that “AI-enabled tools used in pharma R&D are exempt,” whereas digital health and medical AI (even if low risk) are covered by the Act ([11]).

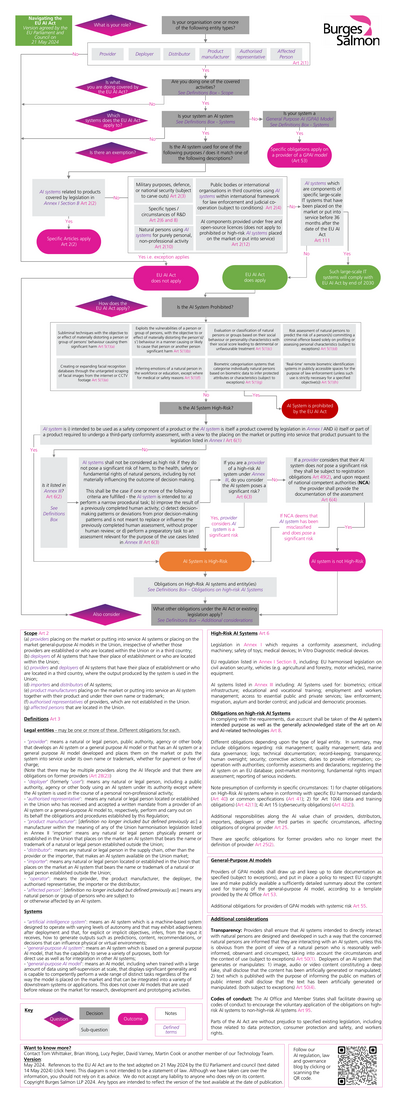

Compliance deadlines come in phases. Prohibited practices took effect on 2 February 2025, and obligations for general-purpose AI (GPAI) models (such as large language models) became binding on 2 August 2025 alongside the final GPAI Code of Practice ([12]) ([13]). Full high-risk and transparency requirements were originally set for 2 August 2026, but the European Commission's Digital Omnibus proposal (November 2025) proposes deferring Annex III high-risk AI obligations to 2 December 2027 and high-risk AI embedded in regulated products (e.g. medical devices under MDR/IVDR) to 2 August 2028, contingent on the availability of harmonized standards ([14]). These obligations are backed by hefty fines (up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious breaches) ([15]) ([16]), so pharma companies need to act now. Key preparatory steps include: inventorying all AI uses, classifying each by risk, integrating AI compliance into quality systems (e.g. risk management under ICH Q9), establishing governance and documentation practices, and training staff on AI literacy ([17]) ([18]). A compliance flowchart should guide decision-making (see Figure 1). Likewise, a core set of Standard Operating Procedures (SOPs) should be drafted, covering areas like data governance, change control for AI models, performance monitoring, and incident reporting.
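The fine ceiling quoted above is a "whichever is higher" rule, so for large companies the turnover-based cap dominates. The sketch below illustrates the arithmetic for the top tier only (prohibited practices, Article 99(3)); it assumes the turnover figure is total worldwide annual turnover for the preceding financial year and reflects the rule that for SMEs the lower of the two amounts applies. The function name is hypothetical, and the result is a statutory maximum, not a prediction of any actual fine.

```python
def max_fine_cap_eur(worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper limit of an AI Act fine for a prohibited-practice breach.

    Top tier only: EUR 35 million or 7% of total worldwide annual turnover
    (preceding financial year), whichever is higher; for SMEs, whichever is lower.
    Hypothetical helper for illustration, not legal advice.
    """
    fixed_cap_eur = 35_000_000
    turnover_cap_eur = worldwide_turnover_eur * 7 / 100  # 7% of turnover
    if is_sme:
        return min(fixed_cap_eur, turnover_cap_eur)
    return max(fixed_cap_eur, turnover_cap_eur)


# A company with EUR 50 billion turnover: the 7% cap (EUR 3.5 billion) applies.
print(max_fine_cap_eur(50_000_000_000))  # 3500000000.0
```

For a mid-sized firm with EUR 100 million turnover, the fixed EUR 35 million cap is the higher figure and therefore sets the ceiling.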

This report provides a comprehensive guide to EU AI Act compliance in the pharmaceutical sector. After an introduction and historical background, we analyze the Act’s structure, obligations, and implications for pharma R&D, manufacturing, clinical trials, medical devices, marketing, and data management. We include data (e.g. market trends, investment figures) and expert perspectives, and illustrate with industry examples (Novartis, Pfizer, BMS, Sanofi). Recommended compliance processes are detailed, including flowcharts and sample SOP outlines. The report concludes with a discussion of future directions (liability rules, global impacts, regulatory sandboxes) and a set of practical recommendations. All claims are backed by authoritative sources.

Introduction and Background

Evolution of AI Regulation

Artificial intelligence – especially machine learning and generative AI (GenAI) – has revolutionized drug discovery, clinical development, manufacturing, and patient care in recent years ([19]) ([5]). Pharmaceutical R&D is increasingly data-driven: the global AI-in-pharma market reached an estimated $4.35 billion in 2025 and is projected to grow to $6.16 billion in 2026, on track toward $35 billion by 2031 (41.5% CAGR) ([20]). AI is credited with compressing development timelines and costs (the industry faces ~$2.6B outlay per new drug) ([21]). Leading companies (e.g. Novartis, Pfizer, BMS, Sanofi) have launched multibillion-dollar initiatives with AI startups and tech partners to apply advanced algorithms to molecular design, clinical trial optimization, and personalized medicine ([22]) ([23]).
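As a quick consistency check on the market figures quoted above (the source estimates are taken as given; only the compounding is illustrated), growing the 2026 estimate at the stated 41.5% CAGR for five years reproduces the 2031 projection:

$$
V_{2031} = V_{2026}\,(1 + r)^{5} = 6.16 \times 1.415^{5} \approx 6.16 \times 5.67 \approx 34.9 \ \text{(USD billion)}
$$

The 2025 to 2026 step implies a similar rate, since $6.16 / 4.35 \approx 1.416$.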

These strides come with new risks and uncertainties. Late-stage trial failures, model biases, data quality gaps, and opaque “black box” algorithms have exposed critical vulnerabilities in AI use ([24]) ([25]). Recognizing this, regulators worldwide are racing to establish guardrails. In Europe, the EU Artificial Intelligence Act (AI Act) emerged as a cornerstone of digital policy. First proposed by the European Commission in 2021, it was negotiated and provisionally agreed by late 2023 ([26]) ([27]). Officially adopted in 2024, it entered into force on 1 August 2024 ([28]). The EU intends the AI Act to be “risk-based”, harmonizing rules across Member States and potentially setting the global standard for AI oversight ([29]) ([30]).

European policymakers take AI governance seriously. The Council of the EU lauded the Act as “ground-breaking” in harmonizing AI rules on a risk-based principle ([29]). It follows earlier digital rulebooks like GDPR, where fundamental rights drove regulation. Under the Act, unacceptable-risk AI uses are banned, high-risk uses face rigorous controls, and lower-risk uses have light-touch requirements ([3]) ([2]). The legislation also includes provisions on AI literacy (staff training), which became applicable in February 2025 ([31]), and establishes an EU AI Office and AI regulatory sandboxes in each Member State to foster safe innovation ([32]) ([33]).

Impact on Pharmaceutical Sector

The pharma industry is uniquely affected. Drugs and medical devices are already among the most regulated products, and AI is now embedded in nearly every aspect of drug development. Examples include: AI algorithms that scan chemical libraries to propose new drug candidates; predictive models for patient recruitment and trial design; image-analysis software for diagnostics; robotic process automation in manufacturing; and even AI chatbots for medical information. Several of these, particularly those that directly affect patients, are likely to fall under the AI Act’s high-risk category (see below). The Act’s new requirements will thus layer onto existing regimes such as Good Manufacturing Practice (GMP), the Medical Device Regulation (MDR), and data protection law. The European Federation of Pharmaceutical Industries and Associations (EFPIA) has stressed that AI regulation “must be fit-for-purpose, risk-based, non-duplicative, globally aligned, and adequately tailored” ([34]).

For pharma, compliance starts with awareness. Only 9% of life-sciences professionals report understanding AI-related regulations well ([35]), a striking gap given AI’s potential to add $100 billion of value to healthcare ([35]). As Leverage et al. note, firms must integrate AI risk management into their Quality Management Systems (QMS) and existing compliance programs ([36]) ([18]). The Act’s requirements on data quality, traceability, transparency, and human oversight often mirror GMP and ISO 13485 provisions, which eases some integration but also demands new documentation and training ([17]) ([18]).

In summary, the introduction of the EU AI Act represents a pivotal moment for pharma. It promises safer, more trustworthy AI, but also imposes significant obligations. Companies must now systematically assess each AI use case, assign risk levels, and implement matching controls. The following sections will unpack these obligations in detail and provide tools (flowcharts, SOP outlines) to achieve compliance efficiently.

The EU AI Act: Structure and Key Provisions

Risk-Based Classification

The AI Act organizes AI systems into four categories by risk level ([3]) ([2]):

- Unacceptable Risk: AI uses that contravene EU values or fundamental rights (e.g. subliminal manipulation, social credit scoring, mass biometric surveillance) are outright prohibited ([3]) ([37]). No pharmaceutical application is expressly banned, but deployment of general-purpose AI (GPAI) for unethical profiling in healthcare could similarly be disallowed.

- High Risk: Systems deemed high-risk face the strictest rules ([3]) ([7]). High-risk AI covers:
  - AI used as a safety component of a product, or as a product itself, covered by EU harmonization legislation listed in Annex I (Article 6(1)). In pharma, this primarily means software as a medical device: for example, diagnostic algorithms, patient monitoring apps, therapeutic decision support, or any AI integrated into medical devices (MD) or in vitro diagnostics (IVD) requiring third-party conformity assessment ([7]) ([6]). Under the MDR/IVDR classification rules, most AI used for diagnosing, treating, or monitoring patients (MDR class IIa, IIb, and III; IVDR classes B to D) is therefore high-risk ([38]) ([6]).
  - Specific uses listed in Annex III. Relevant examples include certain biometric systems (remote biometric identification and biometric categorization; pure identity verification is excluded), evaluation of eligibility for public healthcare services, risk assessment and pricing for life and health insurance, and emergency patient triage ([39]) ([40]). (An AI tool that assigns patients to treatment arms or predicts disease severity arguably falls into these health-related Annex III cases.)

  In short, most clinical and medical AI systems in pharma are high-risk. Analytical tools that influence patient outcomes or resource allocation will require full conformity assessment ([7]) ([8]). Conversely, AI used for administrative or research tasks (e.g. drug discovery algorithms, non-clinical modeling) generally lies in a lower-risk bucket.

- Limited Risk: AI systems with moderate risk face lighter requirements, mainly mandatory transparency under Article 50 (e.g. chatbots and some biometric categorization) ([3]). For pharma, this could cover internal virtual assistants, marketing chatbots, or customer support bots. These must, for example, disclose to users that “you are interacting with an AI tool” ([41]), but do not require third-party assessment.

- Minimal/No Risk: All other AI uses (e.g. internal document processing, supply-chain optimizations) face virtually no new regulatory obligations beyond voluntary codes and the general AI literacy duty (Article 4). General-purpose AI models, such as the large language models (LLMs) behind ChatGPT, are regulated separately under the Act’s GPAI provisions (see below), while an application built on them is classified by its use: it is minimal-risk unless deployed in a high-risk or transparency-triggering context ([42]) ([3]). The Act treats these minimal-risk applications as safe, encouraging innovation through voluntary standards rather than mandatory controls ([43]) ([44]).

The classification logic can be summarized as follows (see Figure 1). Organizations first rule out prohibited practices, then determine whether the AI system triggers existing sector rules (e.g. it is medical device software) or falls into an Annex III use case. If so, it is deemed high-risk ([38]). If not, they check whether it triggers transparency obligations (limited risk) or carries no specific obligations (minimal risk).
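The triage logic just described can be expressed as a short decision function. The sketch below is a simplification under stated assumptions: the boolean screening fields are hypothetical names for answers a regulatory reviewer would record, the checks run from most to least restrictive, and it ignores nuances such as the Article 6(3) derogation for Annex III systems that pose no significant risk, or the fact that a high-risk system can also carry transparency duties.

```python
from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class ScreeningAnswers:
    # Hypothetical screening fields; answers come from a documented regulatory review.
    prohibited_practice: bool          # matches an Article 5 prohibition
    regulated_product_component: bool  # e.g. MDR/IVDR software needing notified-body assessment
    annex_iii_use: bool                # e.g. emergency patient triage
    transparency_trigger: bool         # e.g. chatbot or generative content tool


def classify(answers: ScreeningAnswers) -> RiskCategory:
    """Return the most restrictive category that the answers trigger."""
    if answers.prohibited_practice:
        return RiskCategory.UNACCEPTABLE
    if answers.regulated_product_component or answers.annex_iii_use:
        return RiskCategory.HIGH
    if answers.transparency_trigger:
        return RiskCategory.LIMITED
    return RiskCategory.MINIMAL


# A diagnostic algorithm shipped as class IIa medical device software:
samd = ScreeningAnswers(False, True, False, False)
print(classify(samd).value)  # high
```

Checking the most restrictive category first means a system that is both a chatbot and an Annex III tool is reported as high-risk, which is the category that drives the heavier workload.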

| Risk Category | Coverage | Pharma Examples | Key Obligations |
|---|---|---|---|
| Unacceptable | AI violating fundamental rights or EU values ([3]) (e.g. manipulation, social scoring) | None specific to pharma (unlikely to apply) | Prohibited outright ([3]) |
| High Risk | Software as a medical device/IVD (MDR/IVDR, via Annex I), or Annex III uses ([38]) ([3]) | Diagnostic algorithms, AI in patient monitoring, trial recruitment (health triage) ([7]) ([40]) | Conformity assessment (Art. 43); technical documentation (Annex IV); risk management, validated datasets, transparency, human oversight (Arts. 9-15) ([4]) ([18]) |
| Limited Risk | Specified uses requiring transparency ([3]) (e.g. chatbots, biometric categorization) | Customer-facing chatbots, content generators, certain analytic tools | Disclose AI use and mark AI-generated content where required (Article 50) ([41]) |
| Minimal Risk | All other AI systems | Basic R&D models, administrative analytics | No mandatory AI Act requirements beyond AI literacy (Art. 4); voluntary ethics standards encouraged ([45]) |

Table 1: EU AI Act risk categories, illustrative pharma use cases, and general obligations. (Sources: EU AI Act text and analyses ([38]) ([3]).)

Key Obligations for High-Risk Systems

Pharma companies should assume that any AI system used in clinical or manufacturing operations that affects health will trigger high-risk compliance ([7]) ([4]). The following summarizes core obligations (many of which must be documented in quality records):

- Risk Management System (Article 9): A systematic, continuous process to identify and mitigate risks from AI logic and data ([46]). Companies must perform thorough risk assessments throughout the AI lifecycle, including identifying biases, privacy impacts, and failure modes ([46]) ([17]). For example, pharmaceutical firms should integrate AI risk evaluation into their QMS/ICH Q9 frameworks ([17]). Procedures must be in place to handle incidents (e.g. model errors triggering patient harm) and to revert to manual controls if thresholds are exceeded ([47]).

- Data Governance and Quality (Article 10): High-risk AI must be trained and tested on high-quality data. This means ensuring data sets are accurate, representative, and traceable ([3]) ([18]). Where the AI operates in GxP processes, electronic records should also meet EU GMP Annex 11 (and, for US-facing operations, 21 CFR Part 11) requirements for audit trails and integrity ([48]). In practice, pharma must document data provenance (source, cleansing methods), control for biases (e.g. ensuring clinical trial data doesn’t underrepresent subpopulations), and maintain immutable logs of AI inputs/outputs ([49]) ([46]).

- Technical Documentation (Article 11 & Annex IV): A comprehensive technical file is required, akin to medical device documentation. This file must describe the system’s purpose, architecture, data handling, validation results, risk management plan, human oversight measures, and instructions for use ([50]) ([51]). Crucially, pharma companies must label their AI systems with a unique identifier and provide instructions on intended use, limitations, and proper operation ([18]) ([52]). All design choices, assumptions, and testing protocols must be recorded. Many of these details overlap with existing medical device technical documentation (e.g. MDR software files), but under the AI Act they must explicitly address AI-specific features (like model adaptivity, “self-learning” behaviors) ([50]) ([6]).

- Record-Keeping, Transparency, and Provision of Information (Articles 12-13): High-risk AI systems must automatically log events so that questions or incidents can be traced, and deployers must retain those logs ([18]). Under Article 86, people affected by certain decisions based on high-risk AI outputs have a right to an explanation; more broadly, if an AI system recommends a treatment, companies should be able to explain the data and reasoning behind that output ([53]). In clinical contexts, regulators expect “continuous monitoring” of AI model performance and periodic reporting of any serious malfunctions or biases ([54]) ([55]). Moreover, instructions for use must clarify the required level of human oversight and the expertise needed to supervise the AI ([56]) ([57]).

- Human Oversight (Article 14): High-risk AI systems must be designed so that a defined human role stays in the loop. For example, clinicians must be able to overrule AI diagnoses, and operators of a manufacturing AI must understand its outputs before acting ([6]) ([58]). Standard operating procedures should specify who monitors the AI and how to intervene if errors or atypical outputs occur.

- Accuracy, Robustness, and Security (Article 15): High-risk AI must meet strict performance criteria. For medical AI, this entails rigorous validation against clinical gold standards ([59]). Models should be robust to noise and adversarial attacks, as judged by testing under varied conditions. Cybersecurity measures are required to protect AI systems from tampering ([18]). Companies must maintain cybersecurity protocols commensurate with those for other critical software (e.g. encryption and access controls, as emphasized under GDPR and GMP).

- Audit & Certification: High-risk AI systems require a conformity assessment before being placed on the market. If the AI is itself a medical device (or part of one) that requires notified-body review, this assessment is carried out by a notified body as part of CE marking ([6]). For stand-alone Annex III high-risk AI (like an emergency triage tool not covered by device legislation), the provider generally follows an internal-control procedure (Annex VI), with notified-body involvement reserved mainly for certain biometric systems (Annex VII). All assessment results must be appended to the technical documentation.
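The logging and traceability duties above (and the "immutable logs of AI inputs/outputs" mentioned under data governance) are often implemented with a hash chain, which makes later edits detectable. The following is a minimal sketch, assuming an in-memory list and JSON-serializable inputs and outputs; the class and field names are hypothetical, a production system would persist records in a validated, access-controlled store, and patient identifiers should be pseudonymized before logging. A hash chain is tamper-evident rather than tamper-proof: it detects edited or reordered records, but deleting the most recent records goes unnoticed unless the latest hash is also anchored elsewhere.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class TamperEvidentLog:
    """Append-only log in which each record stores the hash of the previous record."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.records: list[dict] = []

    def append(self, model_version: str, inputs: dict, output: dict) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "prev_hash": self.records[-1]["hash"] if self.records else self.GENESIS,
        }
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered record breaks the chain."""
        prev_hash = self.GENESIS
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
                return False
            prev_hash = record["hash"]
        return True


log = TamperEvidentLog()
log.append("model-v1.2", {"subject": "PSN-001"}, {"risk_score": 0.82})
print(log.verify())  # True
```

Recording the model version in every entry lets an investigator tie a questioned output back to the exact validated model that produced it.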

Table 2 below summarizes these obligations and typical pharma controls. In all cases, companies should integrate AI Act processes into existing compliance channels (e.g. the QMS, GMP change control, design reviews). Quality units should explicitly include “AI model modification” in their change-control protocols and treat AI software defects as “non-conformances” requiring corrective action plans.

| Obligation Category | Requirements & Examples in Pharma | Control Measures/Documentation |
|---|---|---|
| Risk Management (Art. 9) | Continual risk analysis of AI impact on patients/products | Maintain risk register; include AI-specific risks (algorithmic bias, data drift) in QMS ([46]) ([17]); SOPs for incident response. |
| Data Governance (Art. 10) | High-quality training/test data; GDPR-aligned processing ([60]) | Data lineage records, Part 11/Annex 11 audit trails, data access policies; bias-mitigation methodology (e.g. diverse datasets) ([46]) ([60]). |
| Documentation (Art. 11 & Annex IV) | Complete technical file covering design, use, validation ([18]) | Documents within QMS: Intended Use, System Architecture, Validation Reports, Clinical Performance Data, User Manuals ([18]). |
| Record-Keeping & Transparency (Arts. 12-13) | Logging of AI outputs; explanation of decisions for patients | System logs; version control; documentation of explanation processes; informed consent forms noting AI use. |
| Human Oversight (Art. 14) | Clear human roles; overrides; training requirements | SOPs detailing personnel responsibilities; training records; human-in-the-loop controls. |
| Accuracy & Security (Art. 15) | Performance standards; resilience; cybersecurity | Validation studies (sensitivity/specificity analyses); penetration tests; encryption, user authentication systems. |
| Conformity Assessment (Art. 43) | Third-party or internal review of high-risk AI | Notified body audit for MD software, or internal-control assessment by QA; registration in the EU database where required. Documentation of the assessment is kept on file. |

Table 2: Key compliance obligations under the AI Act for high-risk AI and corresponding controls in pharmaceutical operations (Sources: EU AI Act Articles and expert guidance ([18]) ([46])).
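To make the risk register concrete, the sketch below scores each AI-specific risk as likelihood × impact, a common ICH Q9-style convention, and flags medium or high risks that lack a documented mitigation. The 1-5 scales, the thresholds (15 for high, 8 for medium), and all names are hypothetical placeholders that each company would set in its own risk management procedure.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    description: str
    category: str          # e.g. "patient safety", "privacy", "bias", "data drift"
    likelihood: int        # 1 (rare) to 5 (almost certain): hypothetical scale
    impact: int            # 1 (negligible) to 5 (severe): hypothetical scale
    mitigation: str = ""   # empty string means no mitigation documented yet

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def priority(item: RiskItem, high: int = 15, medium: int = 8) -> str:
    """Map a risk score to a priority band; thresholds are hypothetical defaults."""
    if item.score >= high:
        return "high"
    if item.score >= medium:
        return "medium"
    return "low"


def open_items_needing_mitigation(register: list[RiskItem]) -> list[RiskItem]:
    """Medium/high risks with no documented mitigation, highest score first."""
    gaps = [r for r in register if priority(r) != "low" and not r.mitigation]
    return sorted(gaps, key=lambda r: r.score, reverse=True)


register = [
    RiskItem("Older patients under-represented in training data", "bias", 4, 4),
    RiskItem("Input drift after assay change", "data drift", 3, 3, "Monthly drift check"),
]
for item in open_items_needing_mitigation(register):
    print(item.category, item.score, priority(item))  # bias 16 high
```

Validating the scales at construction time keeps out-of-range scores from silently distorting the priority bands.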

Prohibited Practices and Other Provisions

While no routine pharma use is banned as such, companies must avoid unacceptable practices. Article 5 of the AI Act prohibits a defined list of practices, including manipulative or deceptive techniques that materially distort behavior, exploitation of vulnerabilities (e.g. age or disability), social scoring that leads to detrimental treatment, and emotion recognition in the workplace other than for medical or safety reasons ([3]). Decision-makers should screen for these risks; any AI flagged “unacceptable” must not be deployed.

Additionally, transparency obligations apply to certain limited-risk tools. Under Article 50 of the AI Act, providers of generative AI systems must mark synthetic output in a machine-readable way, and deployers must disclose deep fakes; so if a pharmaceutical sales representative uses AI-generated images or video, that content may need a clear label stating that it is AI-created ([41]). Companies should also stay alert to related national measures, such as proposed AI-content labeling laws in individual Member States.
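For chatbots and other systems that interact directly with people, the disclosure duty can be enforced in code rather than left to each deployment. The sketch below wraps any reply function (a stand-in for whatever model or vendor API a company actually uses) so that the first reply in each session carries an AI notice, since the information has to be given at the latest at the first interaction. The notice wording and class name are hypothetical and would need legal and medical review.

```python
from typing import Callable

# Illustrative wording only; the real notice needs legal and medical review.
AI_NOTICE = (
    "You are interacting with an AI system. Answers are generated automatically "
    "and may contain errors; consult a healthcare professional for medical advice."
)


class DisclosingChatSession:
    """Wraps a reply function so the first reply of each session carries the AI notice."""

    def __init__(self, reply_fn: Callable[[str], str]) -> None:
        self._reply_fn = reply_fn   # stand-in for the actual model or vendor API call
        self._disclosed = False

    def ask(self, message: str) -> str:
        reply = self._reply_fn(message)
        if not self._disclosed:
            self._disclosed = True
            return f"{AI_NOTICE}\n\n{reply}"
        return reply


session = DisclosingChatSession(lambda m: f"Your question has been logged: {m}")
print(session.ask("Is the product compatible with grapefruit juice?"))
```

Putting the notice in a wrapper, rather than in each prompt template, means a vendor or model swap cannot silently drop the disclosure.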

Finally, the Act contains special rules for general-purpose AI (GPAI) models (large pretrained LLMs and foundation models). These obligations became applicable on 2 August 2025, accompanied by the final GPAI Code of Practice published by the EU AI Office on 10 July 2025. The Code, developed through a multi-stakeholder process with nearly 1,000 participants, is organized into three chapters: Transparency, Copyright, and Safety & Security. Providers of GPAI models (e.g. OpenAI, Google, Anthropic, Meta) must comply with transparency and copyright obligations; those with models posing “systemic risk” face additional safety requirements. Providers of GPAI models placed on the market before 2 August 2025 have until 2 August 2027 to bring models into compliance ([43]). Downstream users (like pharma using an LLM for internal purposes) generally have only transparency duties under the general framework. Nevertheless, companies deploying LLMs should monitor guidance, as misuse (e.g. acting on hallucinated research outputs, or data privacy breaches) could trigger liability under the revised Product Liability Directive (effective December 2026), which now explicitly treats software, including AI systems, as a “product” subject to strict liability.

Implementation Timeline

The AI Act’s provisions come into effect in stages ([12]) ([13]):

- 1 Aug 2024: Act entered into force. ✅

- 2 Feb 2025: Ban on prohibited AI practices (Article 5) became binding, and AI literacy obligations (training) also took effect ([12]) ([31]). ✅

- 2 Aug 2025: Obligations for General-Purpose AI (GPAI) models became applicable, accompanied by the final GPAI Code of Practice published by the AI Office on 10 July 2025. Member States were required to designate national competent authorities and adopt national laws on penalties. The EU AI Board, Scientific Panel, and Advisory Forum were established ([12]). ✅

- 2 Aug 2026: Original date for Annex III high-risk AI systems to take full effect, along with broader transparency obligations (Article 50) and Commission enforcement powers. Member States must also have at least one AI regulatory sandbox operational by this date.

- ⚠️ Digital Omnibus Proposal (Nov 2025): The European Commission proposed delaying high-risk AI deadlines, linking them to the availability of harmonized standards. Under this proposal, Annex III high-risk AI rules would apply from 2 December 2027 (a 16-month delay), while high-risk AI embedded in regulated products (MDR/IVDR) would apply from 2 August 2028 (12 months after the original 2 August 2027 date) ([14]) ([61]). This proposal is under legislative review and not yet finalized.

- 2 Aug 2027: Compliance deadline for GPAI models placed on the market before 2 August 2025; also the original deadline for high-risk AI in regulated products (Annex I) ([13]).

Pharmaceutical firms must track this evolving schedule carefully. The EU AI Office was established in 2024 and is now operational, coordinating cross-border enforcement and overseeing GPAI model compliance. Spain’s AI watchdog (AESIA) released 16 compliance guides in February 2026 from its pilot sandbox program, providing early practical guidance. Companies should have internal governance (AI risk committees, guidelines) in place now, and should use the extended timeline (if adopted) to refine system audits and CE marking adjustments rather than delay preparations.
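Because the high-risk dates may shift, it can help to encode the milestones once and query them by date. The sketch below uses the dates stated in this guide; the Digital Omnibus dates are a proposal that is not yet law, so they are applied only when the hypothetical `omnibus_adopted` flag is set. The milestone keys are illustrative labels, not terms from the Act.

```python
from datetime import date

# Milestones as stated in this guide (keys are illustrative labels, not legal terms).
BASE_MILESTONES = {
    "prohibited_practices_and_ai_literacy": date(2025, 2, 2),
    "gpai_obligations": date(2025, 8, 2),
    "annex_iii_high_risk": date(2026, 8, 2),
    "high_risk_in_regulated_products": date(2027, 8, 2),
    "legacy_gpai_models": date(2027, 8, 2),
}

# Digital Omnibus proposal (November 2025): not yet adopted.
OMNIBUS_OVERRIDES = {
    "annex_iii_high_risk": date(2027, 12, 2),
    "high_risk_in_regulated_products": date(2028, 8, 2),
}


def applicable_obligations(on: date, omnibus_adopted: bool = False) -> list[str]:
    """Return the milestone labels already in effect on the given date."""
    milestones = dict(BASE_MILESTONES)
    if omnibus_adopted:
        milestones.update(OMNIBUS_OVERRIDES)
    return sorted(name for name, start in milestones.items() if on >= start)


print(applicable_obligations(date(2026, 9, 1)))
# ['annex_iii_high_risk', 'gpai_obligations', 'prohibited_practices_and_ai_literacy']
```

Keeping the proposal in a separate override table makes it a one-line change to switch planning scenarios once the legislative outcome is known.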

Compliance Workflow: Decision Flowchart

To operationalize the above, we propose a compliance flowchart (schematic below) that the lead AI project manager or regulatory officer can use to triage AI systems:

- Identify AI System: Does the tool meet the Act’s definition of an AI system (Article 3(1)), i.e. a machine-based system that operates with some degree of autonomy and infers from its inputs how to generate outputs such as predictions, content, recommendations, or decisions? If no, the Act does not apply; maintain normal best practices. If yes, proceed.

- Check for Exemptions: Is the AI system used solely for R&D and kept within non-clinical contexts? Many pharma R&D tools (e.g. early drug design) may be exempt from the Act ([9]) ([10]). If exempt, focus on voluntary principles (see the SOP notes); otherwise, continue below.

- Determine Risk Category:
  - Banned? If the AI falls under any of the prohibited practices in Article 5 (e.g. manipulative techniques, or emotion recognition of employees outside medical or safety purposes), it cannot proceed ([3]).
  - High-Risk? Check whether the AI is part of a regulated device or software (MDR/IVDR) or falls in an Annex III use case. If so, classify it as High Risk ([38]) ([6]).
  - Limited-Risk? If it is not high-risk but triggers transparency obligations (e.g. generative content tools, certain biometric functions), mark it as Limited Risk.
  - Minimal: Otherwise, the system is minimal-risk.

- Apply Controls Accordingly:
  - Unacceptable: Redesign the approach or cancel the project.
  - High-Risk: Initiate the conformity assessment process: allocate responsibility (provider vs. deployer), set up a risk management plan (Art. 9), a data governance plan (Art. 10), and technical documentation. Engage competent authorities if needed. Plan for CE marking changes if the AI is part of a medical device.
  - Limited-Risk: Ensure transparency notices (e.g. a statement that “AI was used” in any output). Keep a short README-style record explaining the tool’s use.
  - Minimal-Risk: No new legal steps are required, but adopt internal best practices (data ethics, model validation guidelines) to reduce future liability.

- Internal Approvals & Training: Regardless of category, any AI deployment should go through a compliance review board (involving Legal, Regulatory, IT, and Data Science). Employees working with AI must be trained on the Act’s basics ([31]) ([18]).

- Documentation and Audit: Maintain records of the above decisions, risk analyses, tests, and training. Update this assessment whenever the AI’s intended use or algorithm changes significantly (which under the Act may trigger a new conformity assessment) ([18]).

Figure 1: EU AI Act Compliance Flowchart.

Source: Burges Salmon LLP - "Navigating the EU AI Act". Used for educational purposes. We are not the authors of this flowchart.
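The "Apply Controls Accordingly" step of the flowchart can be turned into a checklist generator, so every triaged system gets the same baseline actions. This is a sketch: the action wording paraphrases the flowchart steps, the function and constant names are hypothetical, and a real implementation would pull these lists from controlled SOP documents rather than hard-code them.

```python
# Category-specific actions, paraphrasing the flowchart (hypothetical wording).
REQUIRED_ACTIONS = {
    "unacceptable": ["Stop: redesign the approach or cancel the project"],
    "high": [
        "Allocate provider vs. deployer responsibilities",
        "Risk management plan (Art. 9)",
        "Data governance plan (Art. 10)",
        "Technical documentation (Art. 11, Annex IV)",
        "Human oversight design (Art. 14)",
        "Conformity assessment before placing on the market",
    ],
    "limited": [
        "Transparency notice disclosing AI use",
        "README-style record of the tool's use",
    ],
    "minimal": ["Internal best practices: data ethics, model validation guidelines"],
}

# Steps the flowchart applies regardless of category.
COMMON_ACTIONS = [
    "Compliance review board sign-off",
    "AI literacy training records",
    "Archive classification rationale and re-screen on significant change",
]


def compliance_checklist(category: str, rd_exempt: bool = False,
                         medical_device: bool = False) -> list[str]:
    """Build the action list for one triaged AI system."""
    if rd_exempt:
        actions = ["Document exemption rationale", "Apply voluntary principles"]
    else:
        if category not in REQUIRED_ACTIONS:
            raise ValueError(f"unknown risk category: {category!r}")
        actions = list(REQUIRED_ACTIONS[category])  # copy so the template is not mutated
        if category == "high" and medical_device:
            actions.append("Fold AI Act checks into MDR/IVDR notified-body review and CE marking")
    return actions + COMMON_ACTIONS


for step in compliance_checklist("limited"):
    print("-", step)
```

Rejecting unknown categories, rather than defaulting to "minimal", forces a reviewer to resolve any classification typo before a system is cleared.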

Standard Operating Procedures (SOP) Starter Kit

Pharmaceutical companies should translate the flowchart into actionable SOPs. The following outline describes key SOP modules; each should reference internal documents (e.g. quality manuals) and be customized for company structure.

- AI Inventory SOP:
  - Objective: Systematically identify all AI systems (existing and planned) in the company’s scope.
  - Steps: Maintain a register of AI systems, noting purpose, development status, data used, and deployer (R&D group, manufacturing, etc.). Review this register quarterly.
  - Responsibility: Data Governance or Digital Strategy office, in coordination with IT and department heads.

- Risk Classification SOP:
  - Objective: Assign each AI system to an EU AI Act risk category.
  - Steps: For each AI in the register, answer criteria per Table 1. Document the classification rationale. For borderline cases, consult legal/regulatory affairs.
  - Records: Classification forms should be stored in the QMS. If classified as high-risk, automatically generate a project folder for compliance documentation.

- Quality & Data Governance SOP (Art.10):
  - Objective: Ensure training/validation data quality and traceability.
  - Actions: Implement data management plans: unique identifiers for datasets, lineage tracking, provenance logs. Data used to train AI must be checked for accuracy and bias.
  - Controls: Incorporate Part 11/GMP Annex 11 controls for electronic records, including access controls and audit trails ([48]).
  - Outputs: Data Quality Reports; data stewardship assignments; formal validation of data pipelines.

- Risk Management SOP (Art.9):
  - Objective: Institute continuous risk analysis for AI systems.
  - Procedures: Use the ICH Q9 framework adapted for AI. At introduction of any high-risk AI, perform a preliminary hazard analysis. Update the risk log whenever model outputs cause any deviation or the underlying data changes.
  - Documentation: Risk assessment forms with likelihood and impact scores; classes of risk (e.g. patient safety, privacy); mitigation plans.
  - Review: Monthly or at every release, whichever comes first.

- Technical Documentation SOP (Art.11 & Annex IV):
  - Objective: Compile and maintain a technical file for each high-risk AI.
  - Contents: The items listed in Table 2: system description, architecture, algorithms, intended use, risk assessments, validation and test results, cybersecurity measures, user instructions (with human oversight requirements), and incident logs.
  - Maintenance: The file must be updated regularly, e.g. for each software release. All versions must be archived as per the QMS.

- Change Control SOP:
  - Objective: Control modifications to AI systems.
  - Process: Any change to model architecture, training data, or intended use triggers a change request. The request must be reviewed for its impact on risk classification. Significant changes for high-risk AI require re-assessment under the Act.
  - Verification: Test results for new version, updated risk analysis, sign-off by compliance officer.

- Incident Reporting SOP:
  - Objective: Handle AI malfunctions and near-misses.
  - Actions: Define criteria for “serious incidents” (e.g. patient harm, security breach, data corruption). All significant incidents involving AI must be reported internally and to authorities (as per EU or national rules). Maintain logs for external audits.
  - Template: Incident report form with timeline, impact assessment, root cause.

- Training and AI Literacy SOP:
  - Objective: Ensure personnel understand AI compliance requirements.
  - Content: Mandatory training modules on basics of AI Act, data ethics, and company AI policies. Special sessions for AI developers on documentation and validation standards.
  - Records: Certification of attendance; refresher courses at least annually.

- Vendor and Outsourcing SOP:
  - Objective: Manage third-party AI procurement.
  - Procurement clause: Contracts for AI (software/services) must include clauses ensuring the vendor’s compliance with the EU AI Act.
  - Due Diligence: Before adopting external AI tools, verify providers’ compliance (e.g. that their high-risk AI systems come with an EU declaration of conformity).

- Monitoring and Audit SOP:
  - Objective: Periodically audit AI compliance and governance.
  - Procedure: Internal audits at least annually, covering all SOPs above, current classifications, and documentation completeness. External audits may follow after introduction of complex AI.
  - Audit Trail: Maintain a checklist of regulatory points (e.g. GCNL-ready by Aug 2025 ([59])) and track readiness.

These SOPs form a starter kit. Firms should refine each to align with their size and structure. For instance, a small biotech might combine some steps (e.g. risk classification done by CTO) whereas a large pharma would have dedicated AI governance teams. The key is to document each step.
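The inventory and change-control SOPs interact: each register entry needs a periodic review date, and each change request needs a decision on whether re-assessment is required. The sketch below assumes a 91-day ("roughly quarterly") review interval and treats changes to model architecture, training data, or intended use as potentially significant, mirroring the Change Control SOP; whether a given change is a "substantial modification" under the Act remains a documented regulatory judgment, and all names here are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=91)  # "quarterly", assumed to mean 91 days
# Change types the Change Control SOP treats as potentially significant (illustrative).
SIGNIFICANT_CHANGES = {"model_architecture", "training_data", "intended_use"}


@dataclass
class InventoryEntry:
    system_id: str          # hypothetical internal identifier
    purpose: str
    owner: str              # e.g. R&D group, manufacturing
    risk_category: str      # result of the Risk Classification SOP
    last_reviewed: date

    def review_due(self, today: date) -> bool:
        return today - self.last_reviewed >= REVIEW_INTERVAL


def triage_change(entry: InventoryEntry, change_types: set[str]) -> list[str]:
    """Return the follow-up steps that a change request triggers."""
    steps = ["Review impact on risk classification"]
    if entry.risk_category == "high" and change_types & SIGNIFICANT_CHANGES:
        steps.append("Re-assess conformity under the AI Act")
    steps += ["Test new version", "Update risk analysis", "Compliance officer sign-off"]
    return steps


entry = InventoryEntry("AI-007", "Batch anomaly detection", "Manufacturing", "high", date(2026, 1, 5))
print(entry.review_due(date(2026, 4, 6)))          # True (91 days)
print(triage_change(entry, {"training_data"})[1])  # Re-assess conformity under the AI Act
```

Every change still gets the classification review, so a minor change to a minimal-risk tool cannot quietly turn it into a high-risk one.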

Pharma-Specific Considerations

While the general framework above applies to all industries, the pharmaceutical context raises special points:

- Integration with the Medical Device Regulation (MDR/IVDR): If an AI application also qualifies as a medical device under EU law (e.g. a diagnostic app or AI-driven test kit), it already follows MDR/IVDR conformity assessment. In practice, this means the AI Act’s requirements must be folded into those processes ([6]) ([38]). For example, CE-marking such a device will now also cover the embedded AI’s compliance with AI Act criteria. Medical-device quality engineers should therefore update templates (e.g. Device Master Records) to incorporate AI Act checklists (risk analysis, human oversight plan, etc.) ([6]) ([17]). Conversely, non-device AI (e.g. an AI used for internal process monitoring) must be assessed separately under the AI Act framework.

- R&D Exemption Nuance: Article 2(6) excludes AI systems and models specifically developed and put into service for the sole purpose of scientific research and development, and Article 2(8) excludes research, testing, and development activity on AI systems before they are placed on the market (testing in real-world conditions is not covered) ([9]). Neither provision names medicinal products, but EFPIA has reinforced the view that they cover pharma R&D, arguing that "AI-enabled tools developed solely for medicines R&D are exempt from the requirements of the EU AI Act" and that even where the exemption doesn't apply, most pharma R&D tools would not qualify as high-risk under the Annex I/III frameworks ([62]). However, this exemption is narrow: once an R&D tool is used in a clinical phase (e.g. selecting trial endpoints), it may lose the exemption. Pharmaceutical companies are among those eagerly awaiting Commission guidelines clarifying the boundaries of high-risk classification, stemming from a recent targeted stakeholder consultation ([63]). Companies should carefully designate when an AI moves from research to regulated development, and apply SOPs accordingly.

-

Clinical Trials: AI is increasingly used in trials, from patient matching to virtual cohorts. Where such AI falls within the Act’s scope, the Act adds obligations aimed at ensuring that trial AI is of high quality. For example, synthetic control arms (simulated patient groups generated by AI) are likely high-risk because they bear on safety and efficacy decisions ([64]). Sponsors should treat these algorithms as significant new decision tools: document them fully, validate them against real data, and keep human researchers in the loop ([64]) ([53]). Informed consent forms may need to mention AI involvement if patient data or decision-making is affected.

-

Manufacturing: AI is used for process optimization and quality control in pharma manufacturing (e.g. anomaly detection on production lines). These uses typically fall outside a “medical purpose,” but they can still affect product quality. Where such a system is safety-critical (e.g. an AI that decides whether a bioreactor batch is out of specification), firms should consider applying high-risk-level controls even if the Act does not formally classify it as high-risk. At minimum, these systems need robust validation and oversight, and should undergo internal verification like other process systems. Documenting models used for tasks such as sterilization control or stability prediction also aligns with good manufacturing practice.

-

Marketing and Administration: AI in marketing (personalized advertising, content creation) is generally low risk under the Act, but may still be subject to future labeling rules (e.g. the Spanish law requiring “AI-generated content” labels). Administrative chatbots and assistants (e.g. tools answering physician queries) fall under the limited-risk rules: users must be told that they are interacting with an AI system, and companies should verify the outputs. Companies can reduce compliance effort by differentiating their tools: classify internal-only automation (no patient involvement) as minimal-risk and focus resources on patient-facing AI.

-

Cross-border and Extraterritorial Effects: Non-EU affiliates of a global pharma company must comply if their AI systems are used in EU-regulated activities. The Act applies to providers and deployers worldwide when their AI systems are placed on the EU market or their outputs are used in the EU ([65]) ([66]). Non-EU providers of high-risk AI systems must also appoint an authorised representative established in the EU (Article 22), so multinational companies should decide whether an EU representative or a local legal entity will liaise with EU authorities ([66]). Post-Brexit, AI from UK-based providers must still comply with EU rules when used in the EU; helpfully, UK regulators (MHRA) are aligning their approach (e.g. the “AI Airlock” sandbox ([67])).
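The considerations above can be turned into a first-pass triage of an AI inventory, so that scarce legal and quality resources go to the tools that need them. The sketch below is a minimal illustration under stated assumptions: `AIToolProfile`, `Tier` and `triage` are hypothetical names, the yes/no questions are a simplification of the bullets above rather than the Act's legal tests, and anything that lands in `NEEDS_REVIEW` (or looks borderline) still goes to regulatory and legal review.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    # Hypothetical internal labels, not terms defined by the AI Act
    OUT_OF_SCOPE_RD = "out of scope (sole-purpose R&D, Art. 2(6)/2(8))"
    HIGH_RISK = "high-risk"
    LIMITED_RISK = "limited-risk (transparency duties)"
    MINIMAL_RISK = "minimal-risk"
    NEEDS_REVIEW = "needs legal review"


@dataclass
class AIToolProfile:
    name: str
    sole_purpose_rd: bool                    # developed and used only for research
    used_in_clinical_phase: bool             # e.g. informs trial endpoints or patient selection
    mdr_device_needing_notified_body: bool   # medical device requiring third-party assessment
    patient_or_hcp_facing: bool              # interacts with patients or physicians
    safety_critical_gmp: bool                # e.g. decides batch disposition


def triage(tool: AIToolProfile) -> Tier:
    """Simplified first-pass classification; the order of checks matters."""
    if tool.mdr_device_needing_notified_body:
        return Tier.HIGH_RISK  # device AI needing notified-body assessment
    if tool.sole_purpose_rd and not tool.used_in_clinical_phase:
        return Tier.OUT_OF_SCOPE_RD
    if tool.used_in_clinical_phase or tool.safety_critical_gmp:
        return Tier.NEEDS_REVIEW  # exemption may be lost, or GMP impact is high
    if tool.patient_or_hcp_facing:
        return Tier.LIMITED_RISK
    return Tier.MINIMAL_RISK


if __name__ == "__main__":
    profile = AIToolProfile(
        name="trial-endpoint-selector",
        sole_purpose_rd=True,
        used_in_clinical_phase=True,
        mdr_device_needing_notified_body=False,
        patient_or_hcp_facing=False,
        safety_critical_gmp=False,
    )
    print(profile.name, "->", triage(profile).value)
```

Checking the device question first reflects the point that MDR/IVDR devices requiring a notified body are handled as high-risk regardless of how they were developed; a real SOP would record the rationale for each answer alongside the result.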

Data and Market Analysis

Pharma’s shift to AI is underscored by strong market trends. The global AI-in-pharma market was estimated at $4.35 billion in 2025 and is projected to reach $6.16 billion in 2026, growing toward $35 billion by 2031 (41.5% CAGR). The broader AI in pharma and biotech market was valued at $6.63 billion in 2025 and is expected to reach $154 billion by 2034 (43.6% CAGR) ([20]) ([68]). Investment drivers include reduced discovery timelines, improved predictive accuracy, and regulatory impetus (e.g., agencies opening “AI sandboxes” to de-risk innovation) ([69]). Indeed, strategic alliances abound: Bristol-Myers Squibb’s $674M tie-up with VantAI and Sanofi’s collaboration with OpenAI signal that AI is now core to R&D pipelines ([22]).
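Growth figures like these can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The short sketch below applies it to the numbers quoted in the paragraph above; the helper names `cagr` and `project` are illustrative, and the year spans (2025 to 2026, 2026 to 2031, 2025 to 2034) are read directly from the text.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


# Figures quoted in the text, in USD billions
print(f"2025->2026 growth:      {cagr(4.35, 6.16, 1):.1%}")
print(f"2026->2031 implied CAGR: {cagr(6.16, 35.0, 5):.1%}")
print(f"$6.16B at 41.5% for 5 years: {project(6.16, 0.415, 5):.1f}")
print(f"2025->2034 implied CAGR: {cagr(6.63, 154.0, 9):.1%}")
```

The first estimate is internally consistent: both the one-year step and the 2026 to 2031 span work out to roughly 41.5%. The same check on the broader estimate ($6.63 billion in 2025 to $154 billion in 2034) gives about 41.8% over nine years rather than 43.6%, which suggests the underlying report computes its CAGR over a different base period; it is worth confirming against the original source before reusing the figure.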

However, adoption is uneven. Surveys indicate substantial uncertainty: only 9% of pharma professionals feel well-versed in AI regulations ([35]). Smaller biotech firms often lead in agility and embed compliance quickly, whereas larger legacy companies face more friction in updating their data governance ([70]). Manufacturers are concerned about the complexity of aligning AI Act requirements with GMP: for example, there is still no standardized guidance on integrating “AI change control” into existing versioning processes ([70]) ([52]).

Stakeholders also note that harmonization with other regimes is only partial. While the EU Act is pioneering, analogous efforts continue elsewhere (e.g. evolving US FDA AI/ML guidance, the UK's pro-innovation AI framework and China's AI regulations), and the Act must also interoperate with the GDPR. The first draft harmonized standard for AI, prEN 18286 (AI Quality Management System), entered public enquiry on 30 October 2025; it provides a product-focused framework for AI lifecycle governance under Article 17. Pharma companies therefore must prepare not only for EU law but for a patchwork of AI rules worldwide ([71]) ([72]).

Case Examples and Expert Views

Expert Perspectives: Industry analysts warn that vague definitions in the Act could burden innovation ([73]) ([17]). For instance, Altimetrik’s Vikas Krishan notes that the broad, evolving AI definition may force firms to carry out compliance work for many systems (e.g., predictive analytics in trials) that are likely to be “high risk” ([5]). He and other commentators emphasize the need for harmonized global standards (EU vs. US vs. UK) to avoid fragmentation ([71]). In contrast, others (e.g. IFPMA, EFPIA) view the Act as a “clear message” that boosts trust in AI, and urge firms to treat compliance as an opportunity to strengthen data governance and patient safety ([18]) ([17]).

Industry Collaboration: In response to these compliance challenges, Member States are establishing regulatory sandboxes. The Act requires each Member State to set up at least one AI regulatory sandbox by August 2026 ([32]). Spain’s AESIA has led the way with its pilot sandbox program, releasing 16 detailed compliance guides in February 2026. Pharma companies can apply to these controlled environments (e.g. Spain’s sandbox) to test new AI tools under supervision, and can draw on sector-specific guidance such as France’s CNIL/HAS recommendations on AI in healthcare ([52]). Larger companies (Sanofi, Roche, etc.) are already teaming up with smaller biotechs to pilot AI innovations jointly, combining agility with resources ([74]). For example, Novartis’s real-world AI implementations (drug target identification via deep learning) and Pfizer’s use of AI in trial design have demonstrated that, when managed correctly, AI can shorten development phases without compromising compliance ([23]).

Case Scenarios:

-

Case 1: Digital Pathology AI. A European hospital biotech develops software that uses deep learning to analyze biopsy images. The software is regulated as a Class IIa device under the MDR; because that class requires notified-body assessment, the AI is high-risk under the AI Act. The company updates its CE marking process: it prepares Annex IV technical documentation for the AI component (model training data, validation accuracy, post-market monitoring plan), which is reviewed as part of the MDR conformity assessment. A human pathologist always reviews AI outputs (Article 14), and case logs are kept for audit (Article 12 record-keeping). This conforms with EU rules on medical-device AI ([38]) ([6]).

-

Case 2: AI in Drug Discovery. A large pharma company uses an in-house machine-learning tool to suggest novel molecules. Since this is purely R&D (no immediate patient use) and sole-purpose scientific research is excluded, the AI Act imposes no formal obligations ([9]). The firm nonetheless follows internal quality guidelines: it validates the model on known active compounds and documents the results under its QMS, anticipating regulatory expectations such as the FDA’s guidance on AI/ML in drug development ([72]). It also trains chemists on data bias and conducts an annual internal audit of the AI pipeline.

-

Case 3: Virtual Clinical Recruiter. A CRO deploys an AI tool that screens electronic health records to match patients to oncology trials. The CRO treats this as a high-risk Annex III use (eligibility for access to healthcare). Its SOP requires a conformity check: the team reviews the model for privacy (GDPR compliance), validates it on retrospective data (Article 15 accuracy and robustness requirements), and logs every recruitment decision made partly by the AI. Consent forms inform patients that an algorithm aids selection, in line with the Act’s transparency obligations (e.g. Article 26(11), which requires deployers of Annex III systems to inform affected persons). Before the Annex III obligations apply (August 2026, or later if the Digital Omnibus delay is adopted), the system will be registered in the EU database for high-risk AI systems.
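Cases 1 and 3 both depend on logging each AI-assisted decision together with the human reviewer's final call. The sketch below shows one way such a record could be structured. It rests on assumptions: `DecisionRecord` and `AuditLog` are hypothetical names, the fields are illustrative (Article 12 requires automatic recording of events but does not prescribe this schema), and the SHA-256 hash chain is an added design choice for tamper evidence, not a legal requirement.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    case_id: str         # pseudonymised patient or case identifier
    model_version: str   # exact model build that produced the output
    ai_output: str       # e.g. "eligible" or "suspicious region"
    reviewer_id: str     # human who reviewed the output (human oversight)
    final_decision: str  # decision after human review
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def _digest(body: dict) -> str:
    """Deterministic SHA-256 over the JSON-serialised entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry stores the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: DecisionRecord) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"record": asdict(record), "prev_hash": prev_hash}
        entry_hash = _digest(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {"record": entry["record"], "prev_hash": entry["prev_hash"]}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append(DecisionRecord("case-001", "recruiter-v1.3", "eligible", "dr-a", "eligible"))
    log.append(DecisionRecord("case-002", "recruiter-v1.3", "eligible", "dr-b", "not eligible"))
    print(log.verify())
    log.entries[0]["record"]["final_decision"] = "not eligible"  # simulate tampering
    print(log.verify())
```

Storing the model version and the reviewer's final decision next to the AI output makes overrides visible in later audits; in practice the entries would be written to write-once storage rather than kept in memory.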

These scenarios illustrate that while the AI Act does not alter core scientific and regulatory standards (safety, efficacy, data quality), it mandates procedural compliance steps. Pharma entities must build internal processes to ensure these steps occur, rather than assume “business as usual.”

Implications and Future Directions

Enforcement and Liability

EU enforcement will involve national AI authorities and the EU AI Office, which is now fully operational. Member States were required to designate national competent authorities by August 2025, and the EU AI Board, Scientific Panel, and Advisory Forum have been established. The AI Office coordinates cross-border issues and oversees GPAI model compliance, with enforcement powers applicable from 2 August 2026 ([75]). Early enforcement has focused on prohibited practices (in force since February 2025) and GPAI transparency obligations (since August 2025) ([13]).

On the liability front, the AI Liability Directive was withdrawn by the European Commission in February 2025 (formally scrapped in October 2025), citing a lack of legislative consensus ([76]). However, the revised Product Liability Directive (Directive (EU) 2024/2853) now explicitly treats software and AI systems as "products" subject to strict liability, with application from 9 December 2026 ([77]). A key innovation is a presumption of defect in cases of regulatory non-compliance: if an AI system breaches mandatory AI Act requirements, the claimant's burden of proof is eased. For pharma, this means that if an AI-driven product (say, a diagnostic program) injures someone, the producer faces strict liability, and demonstrated non-compliance with the AI Act can be used as evidence of defectiveness. Documentation and audit trails (as mandated by the AI Act) therefore become crucial evidence of due diligence.

Strategic and Competitive Effects

While compliance imposes costs, many experts see it as a competitive advantage in the long run. The Act’s stated aim is to make the EU a global leader in trustworthy AI by building trust ([26]) ([30]). Early-adopter companies can market their AI tools as “Act-compliant,” signaling safety to patients and partners. Moreover, robust AI governance often goes hand in hand with better general data practices. For example, systematic bias checks not only satisfy Article 10 but can also improve the demographic representativeness of drug trials.

However, concerns remain. Some stakeholders worry that the burden of dual regulation (AI Act + healthcare laws) could slow innovation ([78]) ([17]). For instance, small biotech firms note that complex compliance processes require specialized legal/tech expertise ([70]) ([79]). In response, the Commission’s AI Pact initiative has encouraged voluntary early adoption of a compliance mindset, and the Digital Omnibus proposal (November 2025) would extend high-risk compliance deadlines – a signal that the Commission recognizes the need for additional time and support tools, including harmonized standards and practical guidelines ([80]).

Global and Future Outlook

The EU AI Act sets a precedent. Other economies (US, UK, China) are developing their own AI regulations, and the Act’s approach informs these debates. Pharmaceutical multinationals will likely strive for “one-size-fits-all” compliance, using EU standards as a model. Indeed, the Act’s extraterritorial scope means that any AI system placed on the EU market, whether made in Bangalore or Boston, must meet its requirements ([65]) ([66]).

Pharma companies should thus anticipate a future in which AI regulatory alignment is expected. Initiatives such as the International Coalition of Medicines Regulatory Authorities (ICMRA) or ISO/IEC standard-setting may incorporate AI Act principles. R&D teams should design studies with regulatory scrutiny in mind (e.g. adopting explainable AI methods from the outset).

Finally, innovation continues. Foundation models (e.g. open-domain LLMs) are catalyzing new therapeutic approaches (e.g. protein design, medico-scientific text mining) ([81]). With the GPAI Code of Practice now in place and the first harmonized standard (prEN 18286) in public enquiry, the compliance landscape is rapidly materializing. Pharma firms using foundation models must monitor provider disclosures and ensure their downstream use complies with transparency requirements. Meanwhile, constant vigilance on emerging tech (quantum AI, agentic AI, neuro-interfaces) will be needed, as the Act foresees further adjustments and the Digital Omnibus proposal signals continued regulatory evolution.

Conclusion

The EU AI Act introduces transformative rules that will reshape how pharmaceutical companies manage AI. Achieving compliance demands thorough understanding, organization-wide governance, and proactive planning. This report has detailed the Act’s core components, timeline, and specific implications for pharma, from R&D and clinical trials to manufacturing and marketing. We have outlined practical steps: a compliance flowchart, tables linking obligations to pharma use cases, and an SOP framework to operationalize the law.

Pharma firms should act now. Even if the Digital Omnibus proposal extends high-risk deadlines to 2027–2028, the additional time is best used to build robust compliance infrastructure rather than to defer the work. Key priorities include: assessing AI inventories, launching training programs (AI literacy has been mandatory since February 2025), monitoring the evolving harmonized standards (starting with prEN 18286), and consulting experts on adapting SOPs. Combining legal compliance with robust ethics will not only avoid hefty fines ([15]) but also build patient trust and sustain innovation. With the revised Product Liability Directive (applicable from December 2026) now treating AI as a product subject to strict liability, the incentive to demonstrate AI Act compliance has never been stronger. By integrating AI Act requirements into existing quality and risk systems, the industry can turn a regulatory challenge into a driver of excellence. In an era when AI holds immense promise for drug development and patient care, a well-prepared pharma organization will navigate the new regulations to deliver both safe products and innovative solutions.

References: Authoritative sources were used throughout, as indicated in the text. Key references include EU Commission releases and legislative text ([28]) ([82]), industry analyses ([26]) ([4]), and expert commentaries ([5]) ([18]), among others. These provide the factual basis for all statements above.

External Sources (82)


I'm Adrien Laurent, Founder & CEO of IntuitionLabs. With 25+ years of experience in enterprise software development, I specialize in creating custom AI solutions for the pharmaceutical and life science industries.

DISCLAIMER

The information contained in this document is provided for educational and informational purposes only. We make no representations or warranties of any kind, express or implied, about the completeness, accuracy, reliability, suitability, or availability of the information contained herein. Any reliance you place on such information is strictly at your own risk. In no event will IntuitionLabs.ai or its representatives be liable for any loss or damage including without limitation, indirect or consequential loss or damage, or any loss or damage whatsoever arising from the use of information presented in this document. This document may contain content generated with the assistance of artificial intelligence technologies. AI-generated content may contain errors, omissions, or inaccuracies. Readers are advised to independently verify any critical information before acting upon it. All product names, logos, brands, trademarks, and registered trademarks mentioned in this document are the property of their respective owners. All company, product, and service names used in this document are for identification purposes only. Use of these names, logos, trademarks, and brands does not imply endorsement by the respective trademark holders. IntuitionLabs.ai is an AI software development company specializing in helping life-science companies implement and leverage artificial intelligence solutions. Founded in 2023 by Adrien Laurent and based in San Jose, California. This document does not constitute professional or legal advice. For specific guidance related to your business needs, please consult with appropriate qualified professionals.
